Abstract:

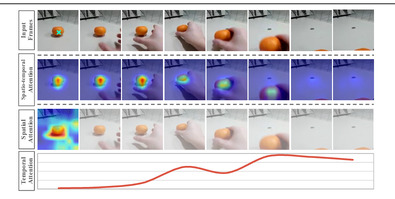

Video alignment aims to match synchronised action information across multiple video sequences. Existing methods typically rely on supervised learning, aligning video frames according to annotated action phases. However, such phase-level annotation cannot effectively guide frame-level alignment, since different individuals complete each phase at different speeds. In this paper, we introduce dynamic warping, which takes between-video information into account, through a new Dynamic Graph Warping Transformer (DGWT) network. To our knowledge, our approach is the first Graph Transformer framework designed for video analysis and alignment. In particular, we design a novel dynamic warping loss function that aligns videos of arbitrary length using attention-level features. We also propose a Temporal Segment Graph (TSG), which enables the adjacency matrix to capture temporal information in video data. Experimental results on two public datasets (Penn Action and Pouring) demonstrate significant improvements over state-of-the-art approaches.
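
To illustrate why dynamic warping suits videos of different lengths and speeds, the following is a minimal sketch of classic dynamic time warping (DTW) between two sequences of per-frame feature vectors. It is not the DGWT loss described in this paper: the function name `dtw_align`, the Euclidean frame distance, and the toy one-dimensional features are all assumptions chosen for illustration.

```python
import math


def dtw_align(a, b):
    """Classic DTW between two sequences of feature vectors.

    Illustrative only: this is textbook DTW with a Euclidean frame
    distance, not the dynamic warping loss proposed in the paper.
    Returns the total alignment cost and the warping path as a list of
    (index_in_a, index_in_b) pairs.
    """
    n, m = len(a), len(b)
    # cost[i][j] = minimal cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j - 1],  # advance both sequences
                cost[i - 1][j],      # advance only a
                cost[i][j - 1],      # advance only b
            )

    # Backtrack from the end to recover which frames were matched.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# Toy example: the same three-phase action performed slowly and quickly.
slow = [[0.0], [0.0], [1.0], [1.0], [2.0]]
fast = [[0.0], [1.0], [2.0]]
total, path = dtw_align(slow, fast)
print(total, path)
```

In this toy case the slow sequence repeats each phase, and the warping path maps several slow frames onto a single fast frame. Phase-level labels alone do not supply this kind of frame-level correspondence.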