Abstract:

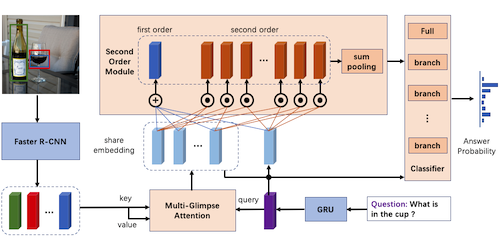

Existing video captioning methods have made great efforts to identify objects/instances in videos, but few of them emphasize the prediction of actions. As a result, the learned models are likely to depend heavily on priors in the training data, such as the co-occurrence of objects, which can cause the generated descriptions to diverge substantially from the video content. In this paper, we explicitly emphasize the importance of \textit{action} by predicting visually-related syntax components, including \textit{subject}, \textit{object} and \textit{predicate}. Specifically, we propose a Syntax-Aware Action Targeting (SAAT) module that first builds a self-attended scene representation to capture global dependencies among multiple objects within a scene, and then decodes the visually-related syntax components by setting different queries. After targeting the \textit{action}, indicated by the \textit{predicate}, our captioner learns an attention distribution over the \textit{predicate} and the previously predicted words to guide the generation of the next word. Comprehensive experiments on the MSVD and MSR-VTT datasets demonstrate the efficacy of the proposed model.
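The three stages described above (self-attended scene representation, query-based decoding of syntax components, and attention over the predicate and previous words) can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the feature dimensions, the random stand-in features and queries, and the helper name `attend` are all assumptions made for illustration, and plain scaled dot-product attention is used in place of whatever attention variant the model actually employs.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend(queries, keys, values):
    """Scaled dot-product attention (hypothetical helper); returns (output, weights)."""
    d = keys.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)
    return weights @ values, weights

rng = np.random.default_rng(0)
num_objects, dim = 5, 16  # assumed sizes, chosen only for the example

# Stand-in for per-object visual features of one video (random here).
objects = rng.normal(size=(num_objects, dim))

# Stage 1: self-attention over the objects yields a scene representation
# in which each object feature is mixed with all the others.
scene, _ = attend(objects, objects, objects)

# Stage 2: one query per syntax component decodes subject, object, predicate.
# The queries would be learned in a trained model; they are random here.
component_names = ["subject", "object", "predicate"]
component_queries = rng.normal(size=(len(component_names), dim))
components, component_weights = attend(component_queries, scene, scene)
predicate = components[component_names.index("predicate")]

# Stage 3: the captioner attends over the predicate and the embeddings of
# previously predicted words to form the context for the next word.
previous_words = rng.normal(size=(4, dim))   # stand-in word embeddings
context_bank = np.vstack([predicate[None, :], previous_words])
decoder_state = rng.normal(size=(1, dim))    # stand-in decoder hidden state
context, caption_weights = attend(decoder_state, context_bank, context_bank)
```

In this sketch, `caption_weights[0, 0]` is the attention mass placed on the predicate and the remaining entries are the mass on the previous words, which is one simple way to read "an attention distribution over the predicate and the previously predicted words".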