Abstract:

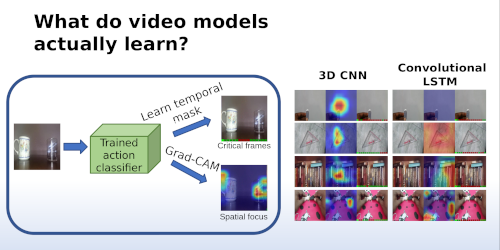

Predicting actions from partially observed videos is challenging because partial videos contain incomplete action executions and therefore carry insufficient discriminative information for classification. Recent work has made progress by enriching the features of the observed part of the video or by generating features for the unobserved part, but without explicitly modeling the fine-grained evolution of visual object relations over both space and time. In this paper, we investigate how the interactions and correlations between visual objects evolve, and we propose a graph-growing method that anticipates future object relations from limited video observations for reliable action prediction. Our method comprises two tasks. First, we use spatial-temporal graph neural networks to reason about object relations in the observed part of the video. Then, we synthesize the spatial-temporal relation representation for the unobserved part via graph node generation and aggregation. The two tasks are learned jointly so that the anticipated future relation representation is informative for action prediction. Experimental results on two action video datasets demonstrate the effectiveness of our method.