Abstract:

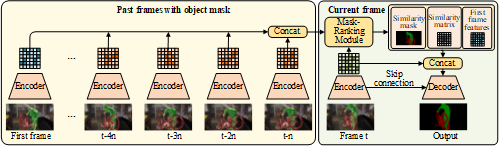

Time-lapse videos are usually visually striking but are often hard to create. In this paper, we propose a self-supervised, end-to-end model that generates a time-lapse video from a single image and a reference video. Our key idea is to extract both the style and the temporal variation features from the reference video and transfer them onto the input image. To ensure both the temporal consistency and the realism of the resulting videos, we introduce several novel designs in our architecture, including classwise NoiseAdaIN, a flow loss, and a video discriminator. Compared with state-of-the-art style transfer baselines, our method is not only computationally efficient but also produces more realistic and temporally smooth time-lapse videos from a still image, with temporal variation consistent with the reference.