Abstract:

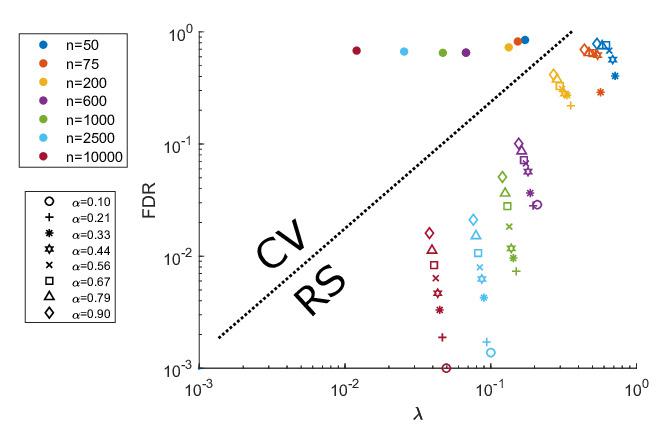

Randomized smoothing is currently the most competitive technique for providing provable robustness guarantees. Since this approach is model-agnostic and inherently scalable, it can certify arbitrary classifiers. Despite its success, recent works show that for a small class of i.i.d. distributions, the largest $\ell_p$ radius that can be certified using randomized smoothing decreases as $O(1/d^{1/2-1/p})$ with the input dimension $d$ for $p > 2$. We complete the picture and show that similar no-go results hold for the $\ell_2$ norm for a much more general family of distributions: those that are continuous and symmetric about the origin. Specifically, we derive two different upper bounds on the $\ell_2$ certified radius, each with a constant multiplier of order $\Theta(1/d^{1/2})$. Moreover, we extend our results to $\ell_p$ ($p > 2$) certification with spherically symmetric distributions, solidifying the limitations of randomized smoothing. We discuss the implications of our results for how accuracy and robustness are related, and why robust training with noise augmentation can alleviate some of these limitations in practice. We also show that on real-world data the gap between the certified radius and our upper bounds is small.
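For orientation, here is a minimal sketch of two quantities the abstract refers to. The first is the standard Gaussian randomized smoothing certificate of Cohen et al. (2019), $R = \sigma\,\Phi^{-1}(\underline{p_A})$. It uses the common simplification $p_B = 1 - p_A$, where $\underline{p_A}$ is a lower bound on the top-class probability under noise. The second is the dimension factor $d^{-(1/2-1/p)}$ from the quoted $\ell_p$ no-go result. This is not the paper's upper bound. The function names `gaussian_l2_radius` and `lp_dimension_factor` and the example inputs are hypothetical, chosen only for illustration.

```python
import math
from statistics import NormalDist


def gaussian_l2_radius(p_a_lower: float, sigma: float) -> float:
    """Certified l2 radius for isotropic Gaussian smoothing (Cohen et al., 2019).

    Assumes the simplification p_B = 1 - p_A, so R = sigma * Phi^{-1}(p_A).
    Returns 0.0 (i.e. abstain / no certificate) when p_a_lower <= 1/2.
    """
    if not 0.0 < p_a_lower < 1.0:
        raise ValueError("p_a_lower must lie strictly between 0 and 1")
    if p_a_lower <= 0.5:
        return 0.0
    # Phi^{-1} is the inverse CDF of the standard normal distribution.
    return sigma * NormalDist().inv_cdf(p_a_lower)


def lp_dimension_factor(d: int, p: float) -> float:
    """Scale factor d^{-(1/2 - 1/p)} matching the O(1/d^{1/2-1/p}) rate for p > 2.

    p = math.inf gives d^{-1/2}, the same order as the Theta(1/d^{1/2})
    multiplier mentioned for the l2 bounds.
    """
    if p <= 2:
        raise ValueError("the quoted l_p rate is stated for p > 2")
    exponent = 0.5 - (0.0 if math.isinf(p) else 1.0 / p)
    return d ** (-exponent)


if __name__ == "__main__":
    # Hypothetical example values: sigma = 0.5 and a confident prediction.
    print("l2 radius:", gaussian_l2_radius(0.99, sigma=0.5))
    # Dimensions of 28x28, 32x32x3 and 224x224x3 inputs.
    for d in (784, 3072, 150528):
        print(d, lp_dimension_factor(d, math.inf))
```

Running it shows the $\ell_\infty$ scale factor shrinking from $1/28$ at $d = 784$ to well under $0.01$ at ImageNet-sized inputs, which is the qualitative "curse of dimensionality" the abstract describes.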