Abstract:

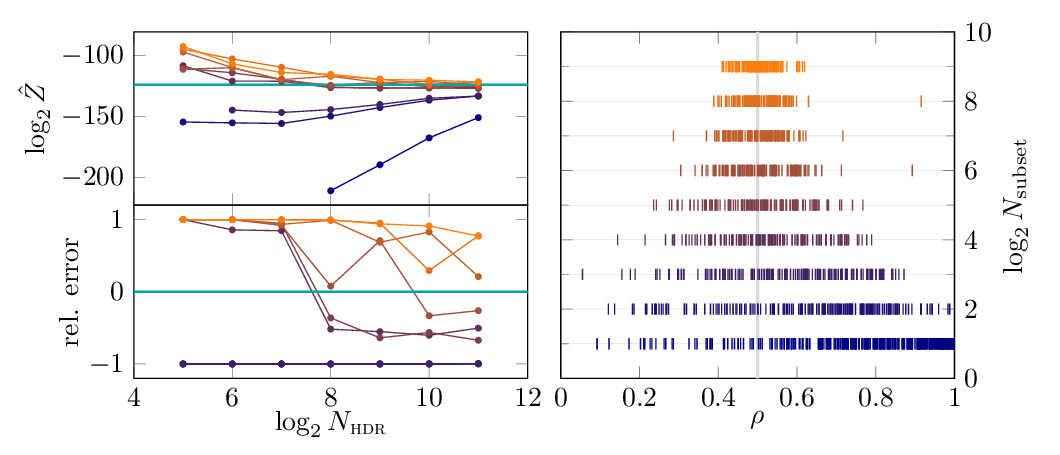

Sliced Stein discrepancy (SSD) and its kernelized variants have shown promising results in goodness-of-fit tests and model learning in high dimensions. Despite their theoretical elegance, their empirical performance depends crucially on finding slicing directions that best discriminate between two distributions. Unfortunately, the previous gradient-based optimisation approach returns sub-optimal slicing directions: it is computationally expensive, sensitive to initialization, and lacks theoretical convergence guarantees. We address these issues in two steps. First, we show in theory that the requirement of using optimal slicing directions in the kernelized version of SSD can be relaxed, which validates the resulting discrepancy when it is computed with a finite number of random slicing directions. Second, given that good slicing directions are crucial for practical performance, we propose a fast algorithm for finding good slicing directions based on ideas from active sub-space construction and spectral decomposition. Experiments in goodness-of-fit tests and model learning show that our approach achieves both the best performance and the fastest convergence. In particular, we demonstrate a 14-80x speed-up in goodness-of-fit tests compared with the gradient-based approach.
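To make the active sub-space idea concrete, the following is a minimal sketch and not the algorithm proposed in this work. It assumes that candidate slicing directions can be read off as the leading eigenvectors of the averaged outer product of score differences between the two distributions. The function name `active_slices`, its arguments, and the choice of matrix are illustrative assumptions.

```python
import numpy as np


def active_slices(score_p, score_q, samples, n_directions):
    """Illustrative sketch (hypothetical helper, not the paper's exact method).

    score_p, score_q: callables mapping an (n, d) array of points to an
        (n, d) array of score values, i.e. gradients of the log density.
    samples: (n, d) array of points at which the scores are compared.
    n_directions: number of slicing directions to return.
    """
    # Score difference at each sample; large where the two models disagree.
    diff = score_p(samples) - score_q(samples)

    # Averaged outer product: a symmetric positive semi-definite (d, d) matrix.
    m = diff.T @ diff / samples.shape[0]

    # Spectral decomposition; eigh returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(m)
    order = np.argsort(eigvals)[::-1][:n_directions]

    # Rows are unit-norm directions, sorted by decreasing eigenvalue.
    return eigvecs[:, order].T, eigvals[order]


if __name__ == "__main__":
    # Toy check: two unit-variance Gaussians that differ by a mean shift.
    rng = np.random.default_rng(0)
    shift = np.array([0.0, 3.0, 0.0, 0.0])
    x = rng.normal(size=(500, 4)) + shift

    directions, strengths = active_slices(
        lambda z: -z,            # score of N(0, I)
        lambda z: -(z - shift),  # score of N(shift, I)
        x,
        n_directions=1,
    )
    print(directions, strengths)
```

In this toy case the score difference is constant and equal to the negative mean shift, so the leading eigenvector aligns (up to sign) with the shift direction. A single eigendecomposition replaces an iterative gradient search over directions, which is the kind of saving the spectral approach aims for.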