Abstract:

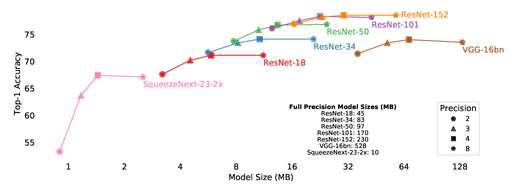

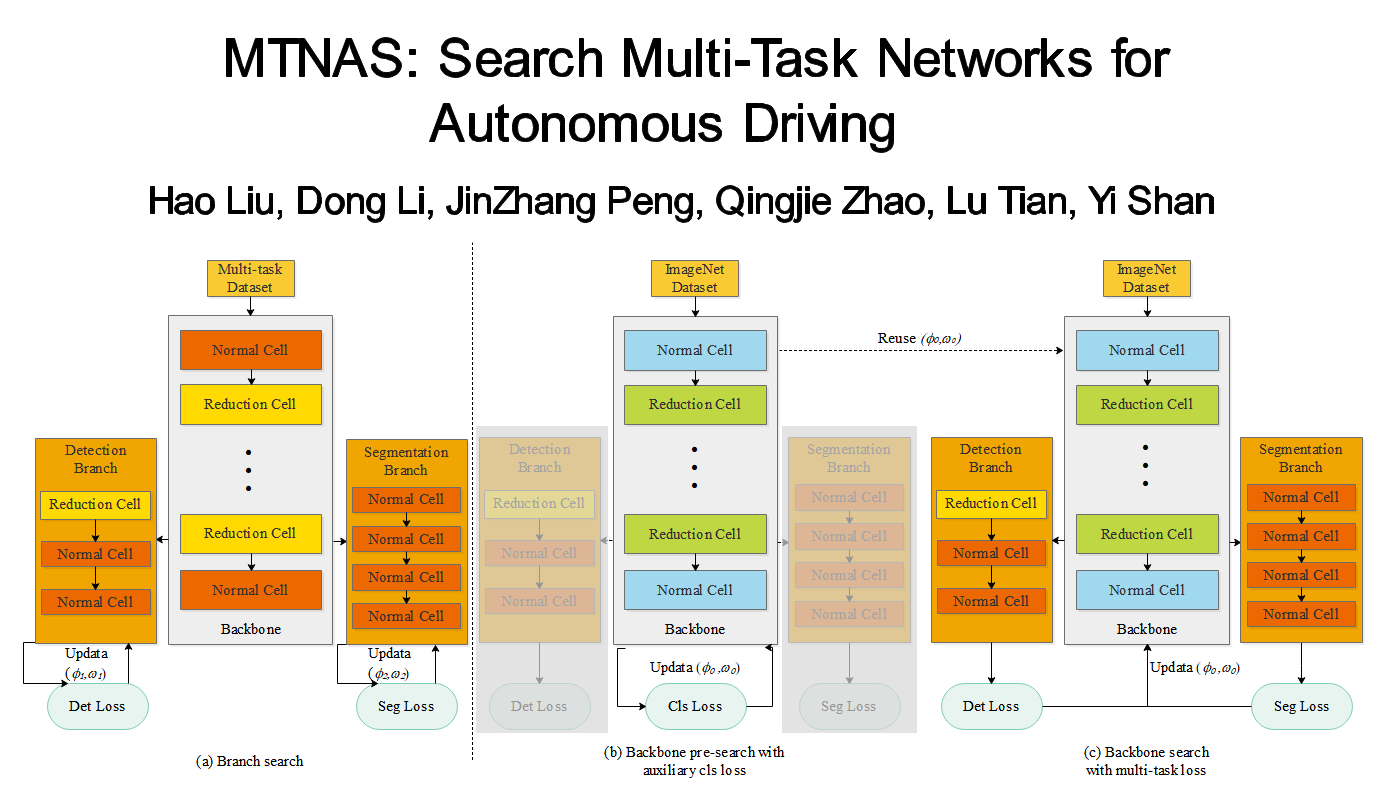

We present APQ, a novel design methodology for efficient deep learning deployment. Unlike previous methods that separately optimize the neural network architecture, pruning policy, and quantization policy, we optimize them jointly. To deal with the larger design space this brings, we train a quantization-aware accuracy predictor that is fed to an evolutionary search to select the best fit. Since directly training such a predictor requires time-consuming quantization data collection, we propose a predictor-transfer technique to obtain the quantization-aware predictor: we first generate a large dataset of <NN architecture, ImageNet accuracy> pairs by sampling a pretrained unified supernet and evaluating the samples directly. We then use these data to train an accuracy predictor without quantization, and transfer its weights to train the quantization-aware predictor, which greatly reduces the quantization data collection time. Extensive experiments on ImageNet show the benefits of this joint design methodology: the model searched by our method maintains the same level of accuracy as the ResNet34 8-bit model while saving 8x BitOps; we obtain the same level of accuracy as MobileNetV2+HAQ while achieving 2x/1.3x latency/energy savings; and joint optimization outperforms separate optimization using ProxylessNAS+AMC+HAQ by 2.3% accuracy while reducing the marginal search cost for a new deployment scenario, in GPU hours and CO2 emission, by 600x.
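To make the predictor-guided search concrete, the following is a minimal sketch of an evolutionary search that ranks candidates with an accuracy predictor under a resource budget. It is not the APQ implementation: the per-layer encoding (`WIDTHS`, `BITS`, `NUM_LAYERS`), the `toy_predictor` function, and the `cost` proxy are all hypothetical stand-ins chosen for illustration. In the setting described above, the predictor would be the trained quantization-aware accuracy predictor and the cost would be a latency, energy, or BitOps estimate.

```python
import random

# Hypothetical search space: each layer picks a channel width and a bitwidth.
WIDTHS = (16, 32, 64)
BITS = (4, 6, 8)
NUM_LAYERS = 5

def random_config(rng):
    """Sample one (width, bits) pair per layer."""
    return [(rng.choice(WIDTHS), rng.choice(BITS)) for _ in range(NUM_LAYERS)]

def toy_predictor(config):
    """Stand-in for a trained accuracy predictor (assumption: accuracy
    rises with width and bitwidth, with diminishing returns)."""
    return sum((w / 64) ** 0.5 + (b / 8) ** 0.5 for w, b in config) / (2 * NUM_LAYERS)

def cost(config):
    """Toy resource proxy that grows with width and squared bitwidth."""
    return sum(w * b * b for w, b in config)

def mutate(config, rng, prob=0.3):
    """Resample each layer's choice independently with probability `prob`."""
    return [(rng.choice(WIDTHS), rng.choice(BITS)) if rng.random() < prob else gene
            for gene in config]

def crossover(a, b, rng):
    """Pick each layer's choice from one of the two parents."""
    return [rng.choice((ga, gb)) for ga, gb in zip(a, b)]

def evolutionary_search(predictor, budget, generations=20, population=40,
                        num_parents=10, seed=0):
    rng = random.Random(seed)
    cheapest = [(min(WIDTHS), min(BITS))] * NUM_LAYERS
    if cost(cheapest) > budget:
        raise ValueError("budget is below the cheapest configuration")

    def feasible(make, fallback, tries=50):
        # Retry until a candidate meets the budget; otherwise use the fallback.
        for _ in range(tries):
            candidate = make()
            if cost(candidate) <= budget:
                return candidate
        return fallback

    pop = [feasible(lambda: random_config(rng), cheapest) for _ in range(population)]
    for _ in range(generations):
        # Rank by predicted accuracy; no candidate is trained or evaluated here.
        pop.sort(key=predictor, reverse=True)
        parents = pop[:num_parents]
        children = []
        while len(children) < population - num_parents:
            p1, p2 = rng.choice(parents), rng.choice(parents)
            if rng.random() < 0.5:
                child = feasible(lambda: mutate(p1, rng), p1)
            else:
                child = feasible(lambda: crossover(p1, p2, rng), p1)
            children.append(child)
        pop = parents + children  # parents are kept, so the best never regresses
    return max(pop, key=predictor)
```

Calling `evolutionary_search(toy_predictor, budget=8000)` returns the feasible configuration with the highest predicted accuracy found. Because candidates are scored only by the predictor, the cost of searching for a new budget is dominated by cheap predictor queries rather than by training, which is the intuition behind the low marginal search cost claimed above.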