Abstract:

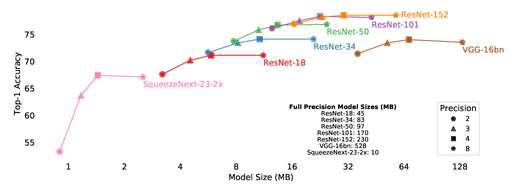

We propose a simple but effective data-driven channel pruning algorithm, which compresses deep neural networks by exploiting the characteristics of their operations in a differentiable way. The proposed approach jointly considers batch normalization (BN) and the rectified linear unit (ReLU) for channel pruning; it estimates how likely each feature map is to be deactivated by the two successive operations and prunes the channels with high deactivation probabilities. To this end, we learn differentiable masks for individual channels and make soft decisions throughout the optimization procedure, which allows us to explore a larger search space and train more stable networks. The proposed formulation, combined with the training framework, enables us to identify compressed models even without a separate fine-tuning procedure. We perform extensive experiments and achieve outstanding accuracy compared with state-of-the-art methods given the same amount of resources.
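
To illustrate the idea of estimating how likely a channel is to be deactivated by BN followed by ReLU, the following is a minimal sketch and not the formulation used in this work. It assumes that the normalized activation of a channel is approximately standard normal, so that the BN output with scale `gamma` and shift `beta` is distributed as N(beta, gamma^2), and the probability of a non-positive output (zeroed by ReLU) is Φ(-beta / |gamma|). The function names, the soft mask defined as one minus this probability, and the `threshold` value are all hypothetical choices made for this example.

```python
import math


def deactivation_probability(gamma: float, beta: float, eps: float = 1e-12) -> float:
    """Probability that gamma * z + beta <= 0 for z ~ N(0, 1).

    Assumption (ours, for illustration): the normalized input to the BN
    affine transform is standard normal, so the BN output is
    N(beta, gamma^2) and ReLU zeroes it whenever it is non-positive.
    """
    scale = max(abs(gamma), eps)  # avoid division by zero when gamma == 0
    # Gaussian CDF evaluated at -beta / |gamma|, written with erf.
    return 0.5 * (1.0 + math.erf((-beta / scale) / math.sqrt(2.0)))


def soft_mask(gamma: float, beta: float) -> float:
    """Hypothetical soft channel mask in [0, 1]: close to 0 for channels
    that are almost always deactivated, close to 1 otherwise."""
    return 1.0 - deactivation_probability(gamma, beta)


def channels_to_prune(gammas, betas, threshold: float = 0.95):
    """Indices of channels whose deactivation probability reaches the
    (hypothetical) threshold."""
    return [
        i
        for i, (g, b) in enumerate(zip(gammas, betas))
        if deactivation_probability(g, b) >= threshold
    ]
```

Under these assumptions, a channel with a strongly negative shift relative to its scale (for example `gamma = 1.0`, `beta = -3.0`) is selected for pruning, while a channel with `beta = 0.0` is deactivated about half of the time and is kept. Because the probability is a smooth function of `gamma` and `beta`, a mask of this kind can in principle be optimized with gradient-based training, which is the property that soft decisions rely on.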