Abstract:

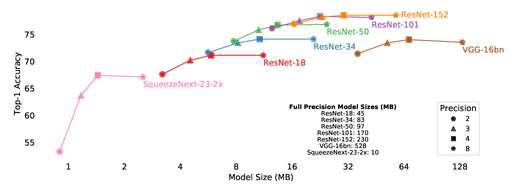

This paper studies budgeted network learning~\cite{veniat2018learning}, which aims to discover good convolutional network structures under parameter/FLOPs constraints. In particular, we present a novel Adaptive End-to-End Network Learning (AdeNeL) approach that learns the structures and parameters of networks simultaneously within a budget on computation and memory consumption. We keep the depth of the networks fixed to ensure a fair comparison with the backbones of competing methods, so AdeNeL learns to optimize both the parameters and the number of filters in each layer. To achieve this goal, AdeNeL uses an iterative sparse regularization path, the Discretized Differential Inclusion of Inverse Scale Space (DI-ISS), to measure the capacity of the network during training. Notably, DI-ISS introduces a group of augmented variables to explore the inverse scale space, and these variables can be used to measure the redundancy of the network. According to the redundancy of the current network, AdeNeL chooses an appropriate operation for it, such as adding more filters. With this strategy, we can dynamically control the balance between computational cost and model performance. Extensive experiments on several datasets, including MNIST, CIFAR10/100, and ImageNet, with the popular VGG and ResNet backbones validate the efficacy of the proposed method. Specifically, with a VGG16 backbone on CIFAR10, our method achieves 92.71% test accuracy with only 0.58M (3.8%) parameters and 43M (13.7%) FLOPs, compared with 92.90% test accuracy, 14.99M parameters, and 313M FLOPs for the full-sized VGG16 model.
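
The redundancy-driven growth step described above can be illustrated with a minimal sketch. It is not the AdeNeL implementation. It assumes that each filter has an augmented vector, that a group soft-threshold yields the sparse variable whose non-zero groups mark filters in use, and that a layer is widened when the fraction of such filters is high. The function names, the threshold `lam`, and the parameters `grow_threshold` and `grow_factor` are all hypothetical.

```python
import math

def group_soft_threshold(v, lam=1.0):
    """Shrink one filter's augmented vector v as a group (assumed proximal map).
    Returns the zero vector when the L2 norm of v is at most lam."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm <= lam:
        return [0.0] * len(v)
    scale = 1.0 - lam / norm
    return [scale * x for x in v]

def active_ratio(V, lam=1.0):
    """Fraction of filters whose sparse augmented variable is non-zero.
    A low ratio suggests the layer is redundant; a high ratio suggests
    that most of its capacity is in use."""
    gammas = [group_soft_threshold(v, lam) for v in V]
    active = sum(1 for g in gammas if any(x != 0.0 for x in g))
    return active / len(V)

def next_width(V, lam=1.0, grow_threshold=0.8, grow_factor=1.5):
    """Hypothetical growth rule: widen the layer when most filters are active,
    otherwise keep the current number of filters."""
    n = len(V)
    if active_ratio(V, lam) >= grow_threshold:
        return math.ceil(n * grow_factor)
    return n

# Toy layer with four filters, each with a 2-dimensional augmented vector.
V = [[3.0, 4.0], [0.3, 0.4], [0.0, 2.0], [1.0, 0.0]]
print(active_ratio(V))                      # two of four filters are active
print(next_width(V, grow_threshold=0.5))    # layer is widened
print(next_width(V, grow_threshold=0.8))    # layer keeps its width
```

In this toy example only two of the four filters survive the threshold, so the layer grows from 4 to 6 filters under the looser `grow_threshold=0.5` and stays at 4 under the default. A real system would also have to respect the parameter/FLOPs budget before growing, which this sketch omits.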