Abstract:

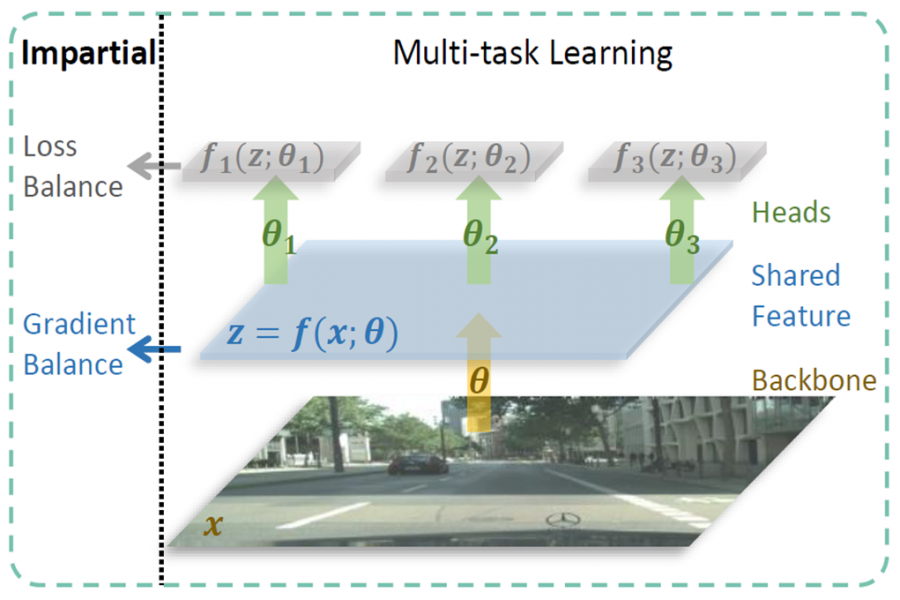

In this work we tackle the multi-person activity recognition problem, in which actor detection, tracking, individual action recognition, and group activity recognition are solved jointly for an input sequence. Related works address only parts of this problem despite sharing similar architectures, so naively combining them yields slow, redundant pipelines and misses the opportunity to exploit the mutual dependencies between tasks. This motivates us to introduce a novel deep learning model, named TrAct-Net, that solves all of the above tasks in a unified architecture. A new multi-branch CNN in TrAct-Net makes inference efficient and simple, and a novel relation encoder takes both the positional and identity relations of detections into account to boost both individual action and group activity recognition performance. The whole network is trained end-to-end using a multi-task learning framework. To the best of our knowledge, TrAct-Net is the first end-to-end trainable model to solve the whole problem in a one-shot manner. Experiments on public datasets demonstrate that TrAct-Net outperforms combinations of state-of-the-art methods while using far fewer model parameters and running faster at inference.