Abstract:

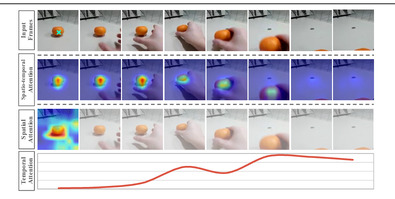

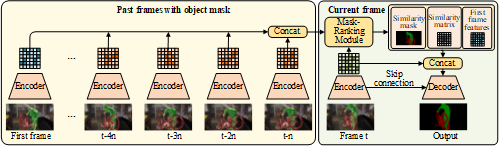

Unsupervised video quantization compresses original videos into compact binary codes so that video retrieval can be performed efficiently. In this paper, we make a first attempt to combine quantization with video retrieval in a method called 3D-UVQ, which achieves high retrieval accuracy at low storage cost. In the proposed framework, we address two main problems: 1) how to design an effective pipeline that perceives video contextual information for video feature extraction; and 2) how to quantize these features for efficient retrieval. To tackle these problems, we propose a 3D self-attention module that exploits spatial and temporal contextual information, where each pixel is influenced by its surrounding pixels. By applying a further recurrent operation, each pixel finally captures the global context from all pixels. We then propose gradient-based residual quantization, which consists of several quantization blocks that approximate the features gradually. Extensive experimental results on three benchmark datasets demonstrate that our method significantly outperforms state-of-the-art methods. An ablation study shows that both the 3D self-attention module and the gradient-based residual quantization improve retrieval performance. Our model is publicly available at https://github.com/brownwolf/3D-UVQ.
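
To make the idea of "several quantization blocks that approximate the features gradually" concrete, the following is a minimal sketch of plain greedy residual quantization. It is not the gradient-based variant proposed in the paper: the codebooks here are assumed to be given as fixed arrays rather than learned, and the function name `residual_quantize` and its arguments are hypothetical names chosen for illustration.

```python
import numpy as np


def residual_quantize(x, codebooks):
    """Greedy residual quantization of a single feature vector.

    x         : array of shape (D,), the feature to quantize.
    codebooks : list of arrays, each of shape (K, D); one codebook per
                quantization block (assumed fixed here, not learned).

    Returns (indices, reconstruction), where indices[m] is the codeword
    chosen by block m and reconstruction is the sum of chosen codewords.
    """
    residual = np.asarray(x, dtype=np.float64).copy()
    reconstruction = np.zeros_like(residual)
    indices = []
    for codebook in codebooks:
        # Each block quantizes what the previous blocks failed to explain.
        dists = np.sum((codebook - residual) ** 2, axis=1)
        k = int(np.argmin(dists))
        indices.append(k)
        reconstruction += codebook[k]
        residual -= codebook[k]
    return indices, reconstruction
```

With M blocks of K codewords each, a feature is stored as M indices, that is M·log2(K) bits, which is where the low storage cost of such schemes comes from. For example, with the two toy codebooks `[[0, 0], [4, 4]]` and `[[0, 0], [1, -1]]`, the vector `[5, 3]` is encoded as indices `[1, 1]` and reconstructed exactly.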