Abstract:

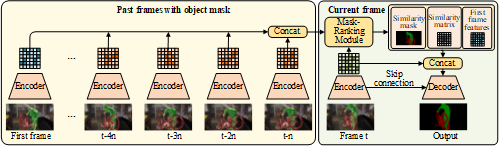

Despite extensive research on cross-modal retrieval, existing methods focus on matching image objects with text words. However, for the large volume of social media content, such as news reports and online posts with images, previous methods are insufficient to model the associations between long text and images. Because long text contains multiple entities and the relationships between them, as well as complex events that share a common scenario, it poses a unique research challenge for cross-modal retrieval. To tackle this challenge, we focus on retrieval between long text and images and propose an event-driven network for cross-modal retrieval. Our approach consists of two modules: the contextual neural tensor network (CNTN) and the cross-modal matching network (CMMN). The CNTN module captures both the event-level and text-level semantics of the sequential events extracted from a long text. The CMMN module learns a common representation space in which the similarity between the image and text modalities is computed. We construct a multimodal dataset based on news reports in People’s Daily. The experimental results demonstrate that our model outperforms existing state-of-the-art methods and provides semantically richer text representations that improve the effectiveness of cross-modal retrieval.