Abstract:

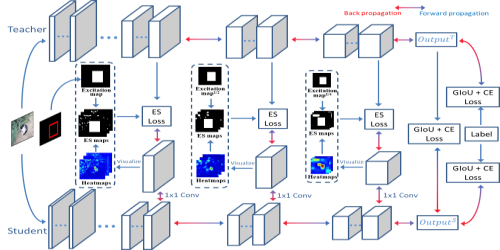

Recent advances in Generative Adversarial Networks (GANs) enable the generation of realistic images, which can potentially be misused. Detecting GAN-generated images (GAN-images) has become more challenging because the underlying artifacts and specific patterns have been significantly reduced. The absence of such traces can hinder detection algorithms from identifying GAN-images and from transferring knowledge to detect other types of GAN-images. In this work, we present a robust, transferable framework for effectively detecting GAN-images, called the Transferable GAN-images Detection framework (T-GD). T-GD is composed of a teacher model and a student model, which iteratively teach and evaluate each other to improve detection performance. First, we train the teacher model on the source dataset and use it as a starting point for learning the target dataset. To train the student model, we inject noise by mixing up the source and target datasets, while constraining the weight variation to preserve the starting point. Our approach is a self-training method, but it differs from prior approaches in that it focuses on improving the transferability of GAN-image detection. T-GD achieves high performance on the source dataset by overcoming catastrophic forgetting, and it effectively detects state-of-the-art GAN-images using only a small volume of data and no metadata information.
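
The two ingredients of student training named above, mixing source and target data and constraining weight variation around the teacher's starting point, can be illustrated with a minimal sketch. The abstract does not give the exact formulation, so everything here is an assumption made for illustration: the Beta-distributed mixing coefficient, the squared-L2 form of the penalty, and the hypothetical names `mixup_batches`, `starting_point_penalty`, `alpha`, and `strength`.

```python
import numpy as np


def mixup_batches(x_src, y_src, x_tgt, y_tgt, alpha=0.5, rng=None):
    """Blend a source batch with a target batch (mixup-style noise injection).

    Assumption: the mixing coefficient is drawn from Beta(alpha, alpha),
    as in standard mixup; the abstract does not specify this.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)  # mixing coefficient in [0, 1]
    x_mix = lam * x_src + (1.0 - lam) * x_tgt
    y_mix = lam * y_src + (1.0 - lam) * y_tgt
    return x_mix, y_mix, lam


def starting_point_penalty(student_weights, teacher_weights, strength=0.01):
    """Penalize deviation of the student's weights from the teacher's.

    Assumption: a squared-L2 distance summed over all weight arrays,
    scaled by a hypothetical `strength` hyperparameter.
    """
    return strength * sum(
        float(np.sum((w_s - w_t) ** 2))
        for w_s, w_t in zip(student_weights, teacher_weights)
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy batches: 4 "images" of shape 8x8x3 with soft labels (real=0, GAN=1).
    x_src, x_tgt = rng.random((4, 8, 8, 3)), rng.random((4, 8, 8, 3))
    y_src, y_tgt = np.zeros((4, 1)), np.ones((4, 1))
    x_mix, y_mix, lam = mixup_batches(x_src, y_src, x_tgt, y_tgt, rng=rng)

    teacher = [rng.random((3, 3)), rng.random(3)]
    student = [w + 0.1 for w in teacher]  # student drifted slightly
    print(x_mix.shape, y_mix.shape, starting_point_penalty(student, teacher))
```

In a full training loop, the penalty would be added to the classification loss computed on the mixed batch, so that the student adapts to the target dataset while remaining close to the weights learned on the source dataset.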