Abstract:

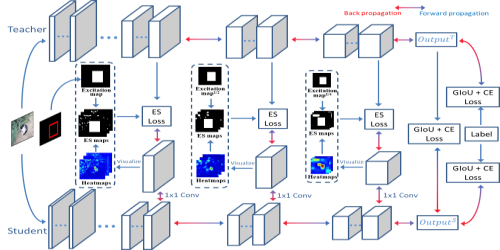

Despite the success of Knowledge Distillation (KD) in image classification, applying KD to object detection remains challenging. Because instance-related information is unevenly distributed, the knowledge useful for detection is hard to locate. In this paper, we propose a conditional distillation framework to find the desired knowledge. Specifically, to retrieve the information related to each target instance, we use the instance information to specify a condition. Given the condition, retrieval is performed by a learnable conditional decoding module, guided by a localization-recognition-sensitive auxiliary task. During decoding, the condition information is encoded as the query and the teacher's representation serves as the key. We use the attention between query and key to measure their correlation, which identifies the most relevant knowledge for distillation. Extensive experiments demonstrate the efficacy of our method, with improvements under various settings. Notably, we boost RetinaNet with a ResNet-50 backbone from $37.4$ to $40.7$ mAP ($+3.3$) under the $1\times$ schedule, which even surpasses the teacher ($40.4$ mAP) with a ResNet-101 backbone under the $3\times$ schedule.
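To make the query-key idea concrete, the following is a minimal sketch, not the paper's implementation. It assumes a single instance condition already embedded as a vector, uses the teacher's per-location features directly as keys with no learned projection, and applies the resulting attention as weights on a squared-error distillation term. The function names `attention_weights` and `weighted_distill_loss` and the toy values are hypothetical; the learnable decoding module and the auxiliary task are omitted.

```python
import math

def attention_weights(query, keys):
    """Softmax over scaled dot products between one query and each key.

    Assumption: keys are raw teacher features at each spatial location;
    a real decoding module would apply learned projections first.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_distill_loss(student, teacher, weights):
    """Attention-weighted mean squared error between student and teacher."""
    loss = 0.0
    for w, s_vec, t_vec in zip(weights, student, teacher):
        sq_err = sum((s - t) ** 2 for s, t in zip(s_vec, t_vec)) / len(t_vec)
        loss += w * sq_err
    return loss

# Toy data (hypothetical): one instance query, three feature locations.
query = [1.0, 0.0]
teacher = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
student = [[3.0, 0.0], [0.0, 3.0], [-3.0, 0.0]]

weights = attention_weights(query, teacher)
loss = weighted_distill_loss(student, teacher, weights)
```

In this toy setup the location whose teacher feature aligns with the query receives the largest weight, so its mismatch dominates the distillation term, which is the intuition behind using attention to select instance-relevant knowledge.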