Abstract:

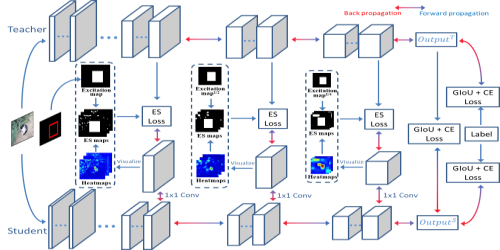

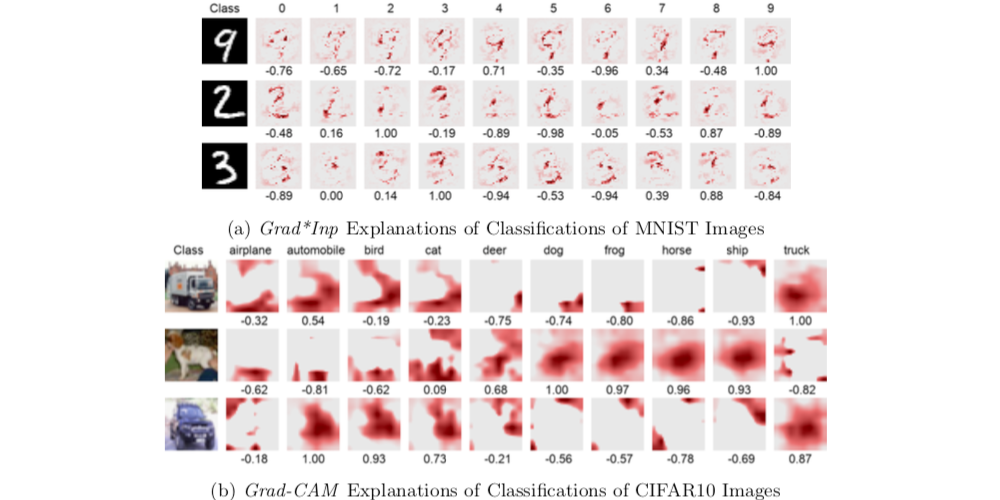

The discriminative knowledge from a high-capacity deep neural network (a.k.a. the "teacher") can be distilled to improve the learning efficacy of a shallow counterpart (a.k.a. the "student"). This paper addresses a general scenario that reuses the knowledge from a cross-task teacher, in which the two models target non-overlapping label spaces. We emphasize that the ability to compare instances is an essential factor that threads knowledge across domains, and propose the RElationship FacIlitated Local cLassifiEr Distillation (ReFilled) approach, which decomposes the knowledge distillation flow into two branches: one for the embedding and one for the top-layer classifier. In particular, rather than reconciling the instance-label confidence between models, ReFilled requires the teacher to reweight the hard triplets forwarded by the student so that the similarity comparison levels between instances are matched. A local embedding-induced classifier from the teacher further supervises the student's classification confidence. ReFilled demonstrates its effectiveness when reusing cross-task models, and also achieves state-of-the-art performance on the standard knowledge distillation benchmarks. The code of the paper can be accessed at https://github.com/njulus/ReFilled.
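
To make the two branches concrete, the following is a minimal pure-Python sketch under stated assumptions; it is not the released implementation. The abstract does not give the loss forms, so everything here is illustrative: the function names (`hard_triplets`, `relation_loss`, `local_classifier_targets`), the use of squared Euclidean distance, the temperature `tau`, the "farthest positive, nearest negative" notion of a hard triplet, and the use of per-batch class centers as the local classifier are all hypothetical choices.

```python
import math

def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def softmax(scores, tau=1.0):
    """Numerically stable softmax with temperature tau."""
    scaled = [s / tau for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]

def kl(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def hard_triplets(student_emb, labels):
    """Assumed notion of 'hard': for each anchor, take its farthest positive
    and nearest negative in the student's embedding space."""
    triplets = []
    for a, ya in enumerate(labels):
        pos = [i for i, y in enumerate(labels) if y == ya and i != a]
        neg = [i for i, y in enumerate(labels) if y != ya]
        if not pos or not neg:
            continue  # anchor has no valid triplet in this batch
        p = max(pos, key=lambda i: sq_dist(student_emb[a], student_emb[i]))
        n = min(neg, key=lambda i: sq_dist(student_emb[a], student_emb[i]))
        triplets.append((a, p, n))
    return triplets

def triplet_prob(emb, a, p, n, tau=1.0):
    """Two-way distribution: [P(anchor closer to p), P(anchor closer to n)]."""
    return softmax([-sq_dist(emb[a], emb[p]), -sq_dist(emb[a], emb[n])], tau)

def relation_loss(teacher_emb, student_emb, triplets, tau=1.0):
    """Embedding branch (assumed form): average KL between the teacher's and
    the student's comparison probabilities on the same triplets."""
    losses = [kl(triplet_prob(teacher_emb, a, p, n, tau),
                 triplet_prob(student_emb, a, p, n, tau))
              for a, p, n in triplets]
    return sum(losses) / len(losses)

def local_classifier_targets(teacher_emb, labels, tau=1.0):
    """Classifier branch (assumed form): soft labels over the classes present
    in the batch, from distances to class centers in the teacher's space."""
    classes = sorted(set(labels))
    centers = []
    for c in classes:
        members = [e for e, y in zip(teacher_emb, labels) if y == c]
        dim = len(members[0])
        centers.append([sum(m[d] for m in members) / len(members)
                        for d in range(dim)])
    targets = [softmax([-sq_dist(e, c) for c in centers], tau)
               for e in teacher_emb]
    return classes, targets
```

In this sketch the teacher never needs to share the student's label space: both branches rely only on distances in the teacher's embedding, which is one way to read the abstract's claim that instance comparison carries knowledge across tasks. The soft labels returned by `local_classifier_targets` would be matched against the student's predictions restricted to the classes in the batch.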