Abstract:

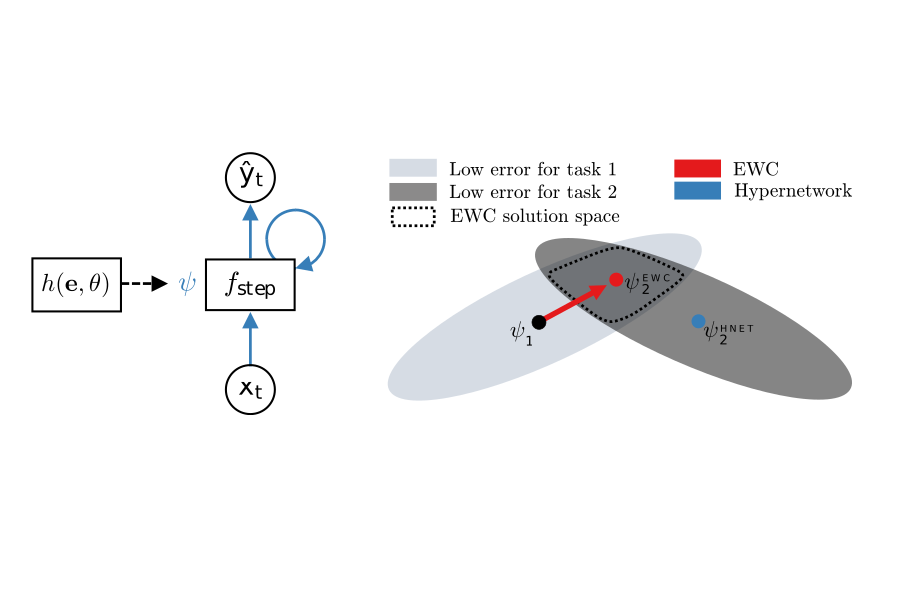

Continual learning (CL) of a sequence of tasks is often accompanied by the catastrophic forgetting (CF) problem. Existing research has achieved remarkable results in overcoming CF, especially for task continual learning. However, limited work has been done to achieve another important goal of CL, knowledge transfer. In this paper, we propose a technique (called BNS) to do both. The novelty of BNS is that it dynamically builds a network to learn each new task, overcoming CF and transferring knowledge across tasks at the same time. Experimental results show that when the tasks are different (with little shared knowledge), BNS can already outperform the state-of-the-art baselines. When the tasks are similar and have shared knowledge, BNS outperforms the baselines by a large margin due to its knowledge transfer capability.