Abstract:

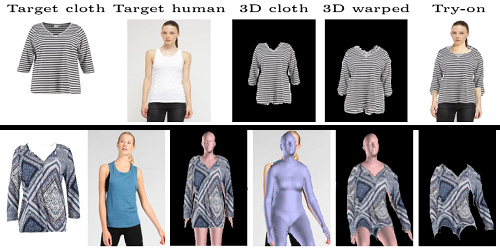

Image-based virtual try-on aims to synthesize a customer image with an in-shop clothes image to produce seamless and natural try-on results, a task that has attracted increasing attention. The main procedure of image-based virtual try-on usually consists of clothes image generation and try-on image synthesis. However, prior methods cannot guarantee satisfactory clothes results when facing large geometric changes and complex clothes patterns, which in turn degrades the subsequent try-on results. To address this issue, we propose a novel virtual try-on network based on landmark-guided shape matching (LM-VTON). Specifically, the clothes image generation stage progressively learns the warped clothes and refined clothes in an end-to-end manner, where we introduce a landmark-based constraint in Thin-Plate Spline (TPS) warping to inject finer deformation constraints around the clothes. The try-on process synthesizes the warped clothes with personal characteristics via a semantic indicator. Qualitative and quantitative experiments on two public datasets validate the superiority of the proposed method, especially for challenging cases such as large geometric changes and complex clothes patterns. Code will be available at https://github.com/lgqfhwy/LM-VTON.
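
As a rough illustration of what a landmark-based constraint on TPS warping could look like, the NumPy sketch below fits a standard 2D thin-plate spline to a set of control points, warps clothes landmarks with it, and measures how far they land from target landmarks. This is not the LM-VTON implementation: the function names (`tps_fit`, `tps_apply`, `landmark_loss`), the use of a closed-form TPS solve rather than network-predicted parameters, and the mean Euclidean distance as the penalty are all assumptions made for illustration.

```python
import numpy as np

def _sq_dists(a, b):
    # Pairwise squared Euclidean distances between rows of a (m, 2) and b (n, 2).
    diff = a[:, None, :] - b[None, :, :]
    return np.sum(diff ** 2, axis=-1)

def _tps_kernel(r2):
    # TPS radial basis U(r) = r^2 * log(r^2), with U(0) defined as 0.
    safe = np.where(r2 > 0, r2, 1.0)  # avoids log(0); log(1) = 0
    return np.where(r2 > 0, r2 * np.log(safe), 0.0)

def tps_fit(src_ctrl, dst_ctrl):
    # Solve the standard TPS linear system mapping src_ctrl -> dst_ctrl.
    # Returns an (n + 3, 2) array: n kernel weights, then 3 affine rows.
    n = src_ctrl.shape[0]
    K = _tps_kernel(_sq_dists(src_ctrl, src_ctrl))
    P = np.hstack([np.ones((n, 1)), src_ctrl])
    A = np.zeros((n + 3, n + 3))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    b = np.zeros((n + 3, 2))
    b[:n] = dst_ctrl
    return np.linalg.solve(A, b)

def tps_apply(params, src_ctrl, pts):
    # Warp arbitrary points (m, 2) with a fitted TPS.
    n = src_ctrl.shape[0]
    U = _tps_kernel(_sq_dists(pts, src_ctrl))
    P = np.hstack([np.ones((pts.shape[0], 1)), pts])
    return U @ params[:n] + P @ params[n:]

def landmark_loss(warped_landmarks, target_landmarks):
    # Hypothetical landmark constraint: mean Euclidean distance between
    # warped clothes landmarks and the corresponding target landmarks.
    diff = warped_landmarks - target_landmarks
    return float(np.mean(np.linalg.norm(diff, axis=1)))
```

In a learning setting, the control-point displacements would typically come from a network, and a term like `landmark_loss` would be added to the warping objective so that points around the clothes (collar, cuffs, hem) are pulled toward their expected positions; how LM-VTON weights or formulates this term is not specified in the abstract.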