Abstract:

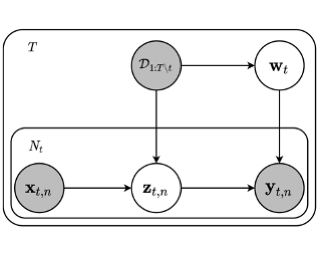

We propose hypothesis disparity regularized mutual information maximization (HDMI), an approach to unsupervised hypothesis transfer that works towards unifying hypothesis transfer learning (HTL) and unsupervised domain adaptation (UDA). In this setting, knowledge from a source domain is transferred solely through hypotheses and adapted to the target domain in an unsupervised manner. In contrast to prevalent HTL and UDA approaches, which typically use a single hypothesis, HDMI employs multiple hypotheses to leverage the underlying distributions of the source and target hypotheses. Each hypothesis adapts to the unlabeled target domain through mutual information maximization. Rather than optimizing each hypothesis independently and without constraint, HDMI exploits the relationship among hypotheses through a hypothesis disparity regularization, which coordinates the target hypotheses to jointly learn better target representations while preserving more transferable source knowledge with better-calibrated prediction uncertainty. HDMI achieves state-of-the-art adaptation performance on benchmark datasets for UDA in the context of HTL, without needing access to the source data during adaptation.
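
To make the shape of such an objective concrete, the following is a minimal sketch and not the implementation used in this work. It assumes that the mutual information between inputs and predictions is estimated as the entropy of the mean prediction minus the mean per-sample entropy, and that disparity is measured by a symmetric KL divergence averaged over all pairs of hypotheses. The abstract fixes neither choice, and the names `mutual_information`, `hypothesis_disparity`, `hdmi_style_loss`, and the weight `lam` are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def mutual_information(probs):
    """Estimate I(X; Y) as H(mean prediction) - mean H(prediction).

    probs: per-sample class-probability lists produced by one hypothesis.
    """
    n, k = len(probs), len(probs[0])
    marginal = [sum(p[c] for p in probs) / n for c in range(k)]
    conditional = sum(entropy(p) for p in probs) / n
    return entropy(marginal) - conditional


def kl(p, q, eps=1e-12):
    """KL(p || q), smoothed by eps so that zero entries in q stay finite."""
    return sum(a * math.log((a + eps) / (b + eps)) for a, b in zip(p, q) if a > 0.0)


def hypothesis_disparity(all_probs):
    """Assumed disparity: symmetric KL averaged over samples and hypothesis pairs.

    all_probs: one list of per-sample predictions for each hypothesis.
    """
    m, n = len(all_probs), len(all_probs[0])
    total, pairs = 0.0, 0
    for i in range(m):
        for j in range(i + 1, m):
            total += sum(
                kl(all_probs[i][s], all_probs[j][s]) + kl(all_probs[j][s], all_probs[i][s])
                for s in range(n)
            ) / n
            pairs += 1
    return total / pairs if pairs else 0.0


def hdmi_style_loss(all_probs, lam=1.0):
    """Negative mean mutual information plus lam times the disparity penalty."""
    mi = sum(mutual_information(p) for p in all_probs) / len(all_probs)
    return -mi + lam * hypothesis_disparity(all_probs)
```

Under these assumptions, the loss is lowest when every hypothesis makes confident, class-balanced predictions on the target data and the hypotheses agree with one another; `lam` trades off the two terms.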