Abstract:

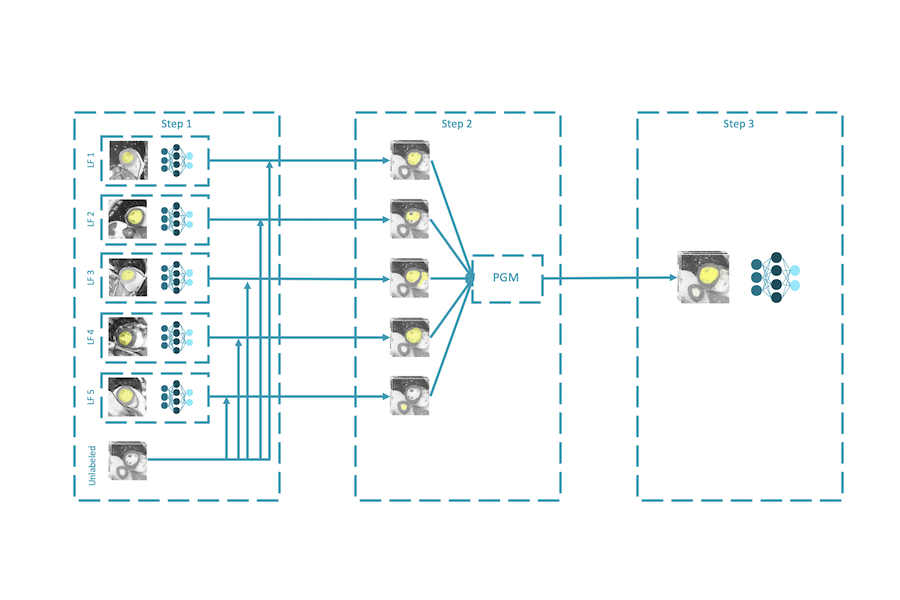

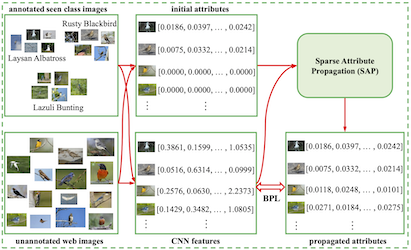

Weakly supervised segmentation is challenging because sparsely labeled pixels do not provide sufficient supervision: a semantic segment may contain multiple distinctive regions, whereas adjacent segments may appear similar. Common approaches use the few labeled pixels in all training images to train a segmentation model, and then propagate labels within each image based on visual or feature similarity. Instead, we treat segmentation as a semi-supervised pixel-wise metric learning problem, where pixels in different segments are mapped to distinctive features. Naturally, our unlabeled pixels participate not only in data-driven grouping within each image, but also in discriminative feature learning within and across images. Our results on the Pascal VOC and DensePose datasets demonstrate substantial accuracy gains under various forms of weak supervision, including image-level tags, bounding boxes, labeled points, and scribbles.