Abstract:

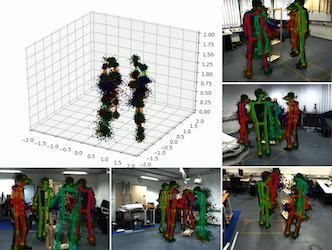

This paper presents a 3D human pose estimation system that uses a stereo pair of 360° sensors to capture the complete scene from a single location. The approach combines the advantages of omnidirectional capture, the accuracy of multi-view 3D pose estimation, and the portability of monocular acquisition. Joint monocular belief maps for joint locations are estimated from 360° images and are used to fit a 3D skeleton to each frame. Temporal data association and smoothing are performed to produce accurate 3D pose estimates throughout the sequence. We evaluate our system on the Panoptic Studio dataset, as well as on real 360° video for tracking multiple people, demonstrating an average Mean Per Joint Position Error of 124.73 mm with cameras at a 30 cm baseline. We also demonstrate improved capabilities over perspective and 360° multi-view systems when presented with limited camera views of the subject.
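For readers unfamiliar with the metric, Mean Per Joint Position Error is commonly computed as the mean Euclidean distance between each estimated joint and its ground-truth counterpart. The following is a minimal sketch of that standard definition for a single pose, not the evaluation code used in this work; the function name `mpjpe` and the three-joint sample values are hypothetical, and any root alignment or averaging over frames is not specified here and is left out.

```python
import math


def mpjpe(predicted, ground_truth):
    """Mean Euclidean distance between corresponding 3D joints.

    Both arguments are sequences of (x, y, z) tuples in the same units,
    so the result is in those units as well.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("pose lists must be non-empty and equally long")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Hypothetical three-joint pose in millimetres (illustrative values only).
gt = [(0.0, 0.0, 0.0), (0.0, 500.0, 0.0), (0.0, 1000.0, 0.0)]
pred = [(30.0, 40.0, 0.0), (0.0, 500.0, 0.0), (0.0, 1000.0, 100.0)]

error_mm = mpjpe(pred, gt)  # per-joint errors are 50, 0 and 100 mm
```

A sequence-level figure such as the one reported above would typically average this quantity over all frames and subjects, assuming the usual convention.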