Abstract:

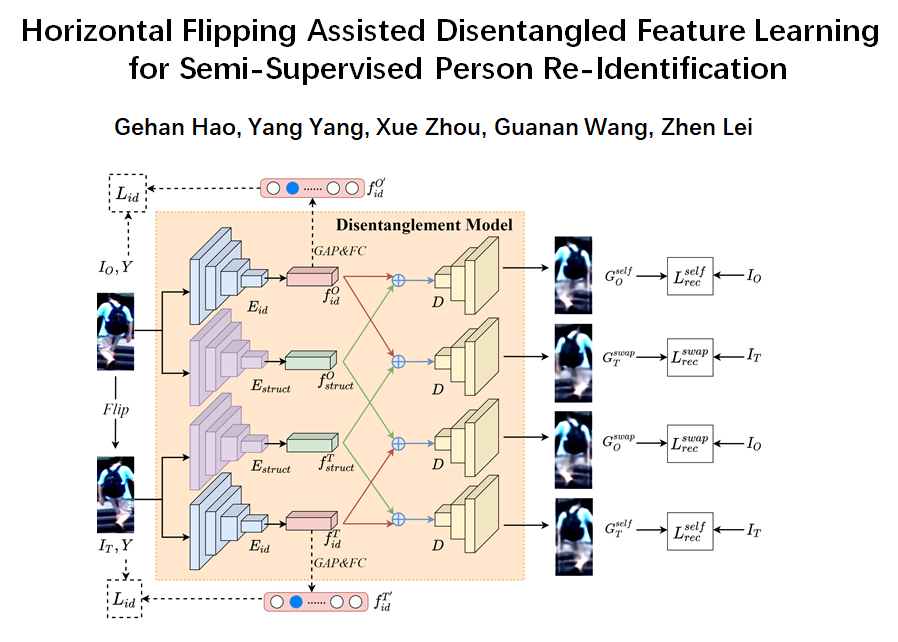

Modern recommenders usually combine collaborative features from user behavior data (e.g., clicks) with content information about users and items (e.g., user ages or item images) to improve recommendations. Although this is promising, the user preference representations learned from the collaborative and content-based perspectives can become entangled, with each intermixing the influence of the other, which leads to sub-optimal performance and unstable recommendations. We therefore propose to disentangle the representations learned from user behavior data and from content information. Specifically, we propose a novel two-level disentanglement generative recommendation model (DICER) that supports both content-collaborative disentanglement and feature disentanglement. For content-collaborative disentanglement, DICER decomposes the features by their marginal distributions based on content and user-item interactions, so that the features learned from each type are statistically independent. For feature disentanglement, by decomposing the Kullback-Leibler divergence, we show theoretically that the features extracted within each type are disentangled at a granular level. Furthermore, DICER uses a co-decoder that simultaneously decodes the content and the user-item interactions to ensure the quality of the learned features. Extensive experiments on three real-world datasets show that DICER significantly outperforms other state-of-the-art methods by 13.5
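
As a point of orientation for the feature-disentanglement argument, the following is a sketch of one widely used decomposition of the averaged Kullback-Leibler term in variational autoencoders. It is an assumption for illustration only: the abstract does not state which decomposition DICER uses. The notation is likewise hypothetical: $n$ indexes training examples, $z = (z_1, \dots, z_d)$ is a latent vector, $q(z \mid n)$ is the encoder posterior, $q(z) = \mathbb{E}_{p(n)}[q(z \mid n)]$ is the aggregate posterior, and the prior is assumed to factorize as $p(z) = \prod_j p(z_j)$.

$$
\mathbb{E}_{p(n)}\big[\mathrm{KL}\big(q(z \mid n)\,\|\,p(z)\big)\big]
= \underbrace{I_q(z; n)}_{\text{mutual information}}
+ \underbrace{\mathrm{KL}\Big(q(z)\,\Big\|\,\prod_{j} q(z_j)\Big)}_{\text{total correlation}}
+ \underbrace{\sum_{j} \mathrm{KL}\big(q(z_j)\,\|\,p(z_j)\big)}_{\text{dimension-wise KL}}
$$

Under these assumptions, the total-correlation term is zero exactly when the latent dimensions are mutually independent under $q(z)$, which is why penalizing it is commonly associated with disentanglement at the level of individual features.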