Abstract:

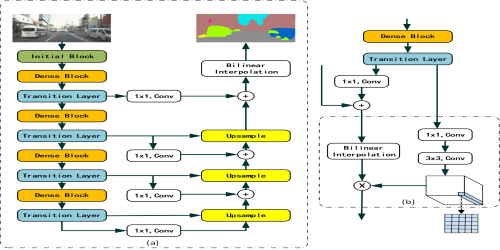

Spatio-temporal (ST) prediction (e.g., crowd flow prediction) is of great importance in a wide range of smart city applications, including urban planning, intelligent transportation, and public safety. Recently, many deep neural network models have been proposed to make accurate predictions. However, manually designing neural networks requires a large amount of expert effort and ST domain knowledge. How can we automatically construct a general neural network for diverse spatio-temporal prediction tasks in cities? In this paper, we study Neural Architecture Search (NAS) for spatio-temporal prediction and propose an efficient spatio-temporal neural architecture search method, entitled AutoST. To the best of our knowledge, the search space is an important human prior for the success of NAS in different applications, while current NAS models concentrate on optimizing the search strategy within a fixed search space. Thus, we design a novel search space tailored to the ST domain, which consists of two categories of components: (i) optional convolution operations at each layer to automatically extract multi-range spatio-temporal dependencies; (ii) learnable skip connections among layers to dynamically fuse low- and high-level ST features. We conduct extensive experiments on four real-world spatio-temporal prediction tasks, including taxi flow and crowd flow, showing that the learned network architectures can significantly improve the performance of representative ST neural network models. Furthermore, our proposed efficient NAS approach searches 8-10x faster than state-of-the-art NAS approaches, demonstrating the efficiency and effectiveness of AutoST.
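To make the two search-space components concrete, the following is a minimal NumPy sketch, not the AutoST implementation. It assumes a softmax-weighted mixture over candidate convolutions and sigmoid-gated skip connections, neither of which is specified in the abstract. The names `mixed_conv`, `mixed_skip`, `forward`, `alphas`, and `betas`, as well as the choice of 1x1, 3x3, and 5x5 averaging kernels as stand-ins for "multi-range" operations, are hypothetical and chosen for illustration only.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array of logits."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def conv2d_same(x, kernel):
    """Naive single-channel 2-D cross-correlation with zero padding ('same' size)."""
    k = kernel.shape[0]
    pad = k // 2
    xp = np.pad(x, pad)
    out = np.zeros_like(x, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(xp[i:i + k, j:j + k] * kernel)
    return out

def mixed_conv(x, kernels, alpha):
    """Component (i), assumed form: weighted sum of candidate convolutions.
    alpha holds hypothetical architecture logits, one per candidate kernel."""
    weights = softmax(alpha)
    return sum(w * conv2d_same(x, k) for w, k in zip(weights, kernels))

def mixed_skip(features, beta):
    """Component (ii), assumed form: fuse earlier feature maps through
    sigmoid gates; beta holds one hypothetical logit per earlier layer."""
    gates = 1.0 / (1.0 + np.exp(-beta))
    return sum(g * f for g, f in zip(gates, features))

def forward(x, kernels, alphas, betas):
    """Each layer fuses all earlier outputs, then applies the mixed convolution."""
    feats = [x]
    for layer, alpha in enumerate(alphas):
        fused = mixed_skip(feats, betas[layer])
        feats.append(mixed_conv(fused, kernels, alpha))
    return feats[-1]

# Toy 6x6 "city grid" of flow values and three candidate ranges (1x1, 3x3, 5x5).
rng = np.random.default_rng(0)
grid = rng.random((6, 6))
kernels = [np.full((k, k), 1.0 / (k * k)) for k in (1, 3, 5)]
alphas = [np.zeros(3), np.zeros(3)]   # two layers, uniform operation weights
betas = [np.zeros(1), np.zeros(2)]    # layer l can see l + 1 earlier feature maps
out = forward(grid, kernels, alphas, betas)
print(out.shape)
```

In a sketch like this, searching an architecture would amount to learning `alphas` and `betas` alongside the convolution weights and then keeping the highest-weighted operation and connections at each layer; whether AutoST discretizes its architecture this way is not stated in the abstract.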