Abstract:

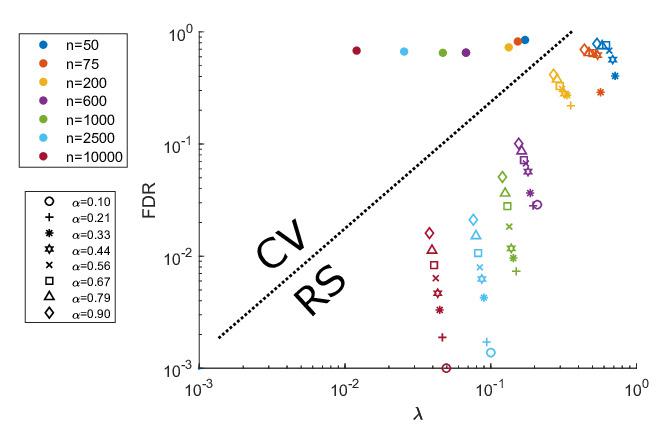

We present a statistically consistent algorithm for constrained classification problems in which the objective (e.g. F-measure, G-mean) and the constraints (e.g. demographic parity, coverage) are defined by general functions of the confusion matrix. The key idea is to reduce the problem to a sequence of plug-in classifier learning problems by formulating it as an optimization problem over the intersection of the set of achievable confusion matrices and the set of feasible matrices. For objectives and constraints that are convex functions of the confusion matrix, our algorithm requires $O(1/\epsilon^2)$ calls to the plug-in routine, improving on the $O(1/\epsilon^3)$ rate achieved by Narasimhan (2018). We demonstrate empirically that our algorithm performs at least as well as state-of-the-art methods for these problems.
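To make the terminology concrete, the following minimal sketch shows how an objective (F-measure, G-mean) and a constraint (coverage) can each be written as a function of a binary confusion matrix, and how a plug-in classifier thresholds a class-probability estimate. It is an illustration only and is not the algorithm of this paper: the helper names (`confusion_matrix`, `plug_in_classifier`, `best_threshold`), the toy data, and the brute-force threshold search are all assumptions introduced here.

```python
import math

def confusion_matrix(y_true, y_pred):
    """Normalized 2x2 matrix: C[i][j] = fraction of examples with label i predicted as j."""
    n = len(y_true)
    C = [[0.0, 0.0], [0.0, 0.0]]
    for t, p in zip(y_true, y_pred):
        C[t][p] += 1.0 / n
    return C

def f_measure(C):
    """F1 written purely in terms of confusion-matrix entries."""
    tp, fp, fn = C[1][1], C[0][1], C[1][0]
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0

def g_mean(C):
    """Geometric mean of the true-positive and true-negative rates."""
    tpr = C[1][1] / (C[1][0] + C[1][1])
    tnr = C[0][0] / (C[0][0] + C[0][1])
    return math.sqrt(tpr * tnr)

def coverage(C):
    """Fraction of examples predicted positive."""
    return C[0][1] + C[1][1]

def plug_in_classifier(eta, threshold):
    """Predict 1 where the estimated P(y=1|x) meets the threshold (hypothetical helper)."""
    return [1 if e >= threshold else 0 for e in eta]

def best_threshold(y_true, eta, max_coverage):
    """Brute-force search: maximize F-measure subject to coverage <= max_coverage."""
    best_t, best_f = None, -1.0
    for t in sorted(set(eta)):
        C = confusion_matrix(y_true, plug_in_classifier(eta, t))
        if coverage(C) <= max_coverage and f_measure(C) > best_f:
            best_t, best_f = t, f_measure(C)
    return best_t, best_f

# Toy labels and probability estimates (made up for illustration).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
eta = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2, 0.1, 0.05]

C = confusion_matrix(y_true, plug_in_classifier(eta, 0.5))
best_t, best_f = best_threshold(y_true, eta, max_coverage=0.25)
print(f_measure(C), g_mean(C), coverage(C), best_t, best_f)
```

The exhaustive search over thresholds stands in for the constrained problem only on this toy example; it does not reflect the sequence of plug-in calls or the rates discussed in the abstract.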