Abstract:

Despite recent advances in deep reinforcement learning, Deep Q-network (DQN) models still perform poorly on problems with high-dimensional action spaces.

The problem is even more pronounced for high-dimensional continuous action spaces, where discretizing the actions causes a combinatorial increase in the number of network outputs.
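To make the combinatorial growth concrete, the following minimal sketch counts network outputs under two assumptions that are ours for illustration: every action dimension is discretized into the same number of bins, and a single DQN head emits one Q-value per joint action. The function names and the choice of 6 dimensions with 11 bins are hypothetical and are not values taken from this work.

```python
def joint_output_count(n_dims: int, n_bins: int) -> int:
    """Outputs needed when one head scores every joint discretized action."""
    return n_bins ** n_dims


def per_dimension_output_count(n_dims: int, n_bins: int) -> int:
    """Outputs needed when each action dimension gets its own module."""
    return n_dims * n_bins


# Illustrative setting: 6 action dimensions, 11 bins per dimension.
joint = joint_output_count(6, 11)
factored = per_dimension_output_count(6, 11)
print(joint, factored)  # 1771561 66
```

The gap between the two counts is what motivates splitting the network into per-dimension action modules rather than enumerating joint actions.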

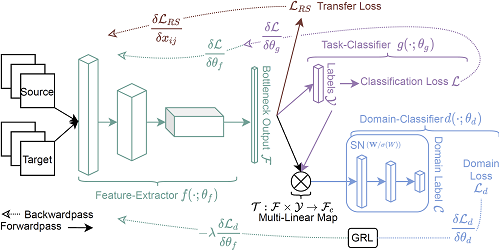

Recent works approach the problem by dividing the network into multiple parallel or sequential (action) modules responsible for different discretized actions.

However, there are drawbacks to both the parallel and the sequential approaches.

Parallel module architectures lack coordination between action modules, which adds complexity to the task, while sequential structures can suffer from vanishing gradients and an exploding parameter space.
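The contrast between the two compositions can be sketched as follows. This is an illustrative toy with random linear modules, not the architecture evaluated in this work: the helper names, the layer sizes, and the choice to condition each sequential module on one-hot encodings of earlier choices are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)


def parallel_actions(features, heads):
    """Each module picks its bin from the shared features alone (no coordination)."""
    return [int(np.argmax(features @ W)) for W in heads]


def sequential_actions(features, heads, n_bins):
    """Each module also sees one-hot encodings of the bins chosen by earlier modules."""
    chosen = []
    inputs = features
    for W in heads:
        a = int(np.argmax(inputs @ W))
        chosen.append(a)
        # The input grows by n_bins at every step, so later modules need more weights.
        inputs = np.concatenate([inputs, np.eye(n_bins)[a]])
    return chosen


# Hypothetical sizes chosen only for illustration.
n_features, n_dims, n_bins = 8, 3, 5
features = rng.normal(size=n_features)

parallel_heads = [rng.normal(size=(n_features, n_bins)) for _ in range(n_dims)]
sequential_heads = [
    rng.normal(size=(n_features + i * n_bins, n_bins)) for i in range(n_dims)
]

parallel_choice = parallel_actions(features, parallel_heads)
sequential_choice = sequential_actions(features, sequential_heads, n_bins)

# Weight counts: the sequential chain grows with the number of action dimensions.
parallel_params = sum(W.size for W in parallel_heads)
sequential_params = sum(W.size for W in sequential_heads)
```

In this toy setting the parallel modules never observe one another's choices, while the sequential chain gains coordination at the cost of a longer dependency path and a larger weight count.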

In this work, we show that the compositional structure of the action modules has a significant impact on model performance.

We propose a novel approach to infer the network structure for DQN models operating with high-dimensional continuous actions.

Our method is based on the uncertainty estimation techniques introduced in this paper.

Our approach achieves state-of-the-art performance on MuJoCo environments with high-dimensional continuous action spaces.

Furthermore, we demonstrate that the proposed approach improves performance on a realistic AAA sailing simulator game.