Abstract:

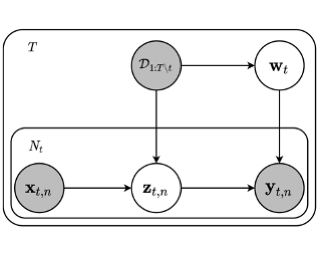

Cooperative multi-agent decision making involves a group of agents cooperatively solving their individual learning problems while communicating over a (sparse) network with delays. In this paper, we consider the kernelised contextual bandit problem, where the reward obtained by an agent is an arbitrary linear function of the contexts' images in the associated reproducing kernel Hilbert space (RKHS), and a group of agents must cooperate to solve their unique decision problems. We propose Coop-KernelUCB, an algorithm that provides near-optimal bounds on the per-agent regret in this setting and is efficient in both computation and communication. For special cases of the cooperative problem, we also provide variants of Coop-KernelUCB that achieve optimal per-agent regret. In addition, our algorithm generalizes several existing results in the multi-agent bandit setting. Finally, on a series of synthetic and real-world multi-agent network benchmarks, our algorithm significantly outperforms existing clustering- or consensus-based algorithms, even in the linear setting.
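
The reward model and regret notion described above can be written out as a rough sketch. The notation here is assumed for illustration and is not taken from the paper: $\phi$ is the feature map of a kernel $k$ with RKHS $\mathcal{H}$, $\theta_v \in \mathcal{H}$ is the unknown parameter of agent $v$, $\mathcal{D}_{v,t}$ is the set of contexts available to agent $v$ at round $t$, $x_{v,t} \in \mathcal{D}_{v,t}$ is the context it selects, and $\varepsilon_{v,t}$ is zero-mean noise.

```latex
% Kernel induced by the feature map (standard RKHS identity)
k(x, x') = \langle \phi(x), \phi(x') \rangle_{\mathcal{H}}

% Assumed reward model: linear in the RKHS image of the chosen context
y_{v,t} = \langle \theta_v, \phi(x_{v,t}) \rangle_{\mathcal{H}} + \varepsilon_{v,t}

% Assumed per-agent (pseudo-)regret after T rounds
R_v(T) = \sum_{t=1}^{T} \Big( \max_{x \in \mathcal{D}_{v,t}}
         \langle \theta_v, \phi(x) \rangle_{\mathcal{H}}
         - \langle \theta_v, \phi(x_{v,t}) \rangle_{\mathcal{H}} \Big)
```

Under this reading, the linear setting mentioned at the end of the abstract would correspond to the special case $\phi(x) = x$, that is, $k(x, x') = x^\top x'$.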