Abstract:

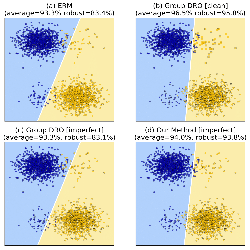

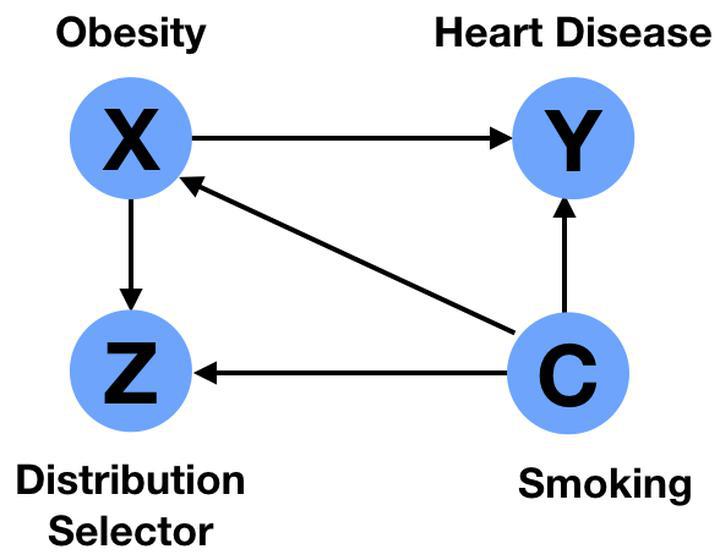

Increasing model capacity well beyond the point of zero training error has been observed to improve average test accuracy. However, such overparameterized models have recently been shown to obtain low worst-group accuracy (i.e., low accuracy on atypical groups of test examples) when there are spurious correlations that hold for the majority of training examples. We show on two image datasets that, in contrast to average accuracy, overparameterization hurts worst-group accuracy in the presence of spurious correlations. We replicate this surprising phenomenon in a synthetic example and identify properties of the data distribution that induce the detrimental effect of overparameterization on worst-group accuracy. Our analysis leads us to show that a counter-intuitive approach of subsampling the majority group yields high worst-group accuracy in the overparameterized regime, whereas upweighting the minority does not. Our results suggest that, when it comes to achieving high worst-group accuracy, there is a tension between using overparameterized models and using all of the training data.
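To make the quantities above concrete, here is a minimal illustrative sketch in plain Python. It is not the authors' code. All function names are hypothetical, and groups are treated as arbitrary hashable labels. Worst-group accuracy is computed as the minimum per-group accuracy. "Subsampling the majority" is assumed here to mean randomly shrinking every group to the size of the smallest group. "Upweighting the minority" is assumed to mean per-example weights inversely proportional to group size, so that each group contributes equal total weight. Both are common choices, not necessarily the exact schemes used in the paper.

```python
import random
from collections import defaultdict


def worst_group_accuracy(preds, labels, groups):
    """Minimum, over groups, of the accuracy on that group's examples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for p, y, g in zip(preds, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    return min(correct[g] / total[g] for g in total)


def subsample_to_smallest_group(indices_by_group, seed=0):
    """Hypothetical subsampling rule (an assumption, not the paper's code):
    keep a random subset of each group equal in size to the smallest group,
    which discards most of the majority-group examples."""
    rng = random.Random(seed)
    n_min = min(len(idx) for idx in indices_by_group.values())
    kept = []
    for g in sorted(indices_by_group):
        kept.extend(rng.sample(indices_by_group[g], n_min))
    return sorted(kept)


def upweight_weights(groups):
    """Hypothetical upweighting rule (an assumption): weight each example by
    n / (num_groups * group_size), so every group has equal total weight
    while all of the training data is kept."""
    counts = defaultdict(int)
    for g in groups:
        counts[g] += 1
    n = len(groups)
    return [n / (len(counts) * counts[g]) for g in groups]


if __name__ == "__main__":
    groups = ["majority"] * 8 + ["minority"] * 2
    labels = [1] * 10
    preds = [1] * 8 + [1, 0]  # all majority examples correct, half of the minority
    print(worst_group_accuracy(preds, labels, groups))  # 0.5, while average accuracy is 0.9
```

In this toy example the average accuracy is 0.9, but the worst-group accuracy is only 0.5. This gap is the one the abstract is concerned with. The two data-rebalancing helpers show the structural difference being contrasted: subsampling throws data away, whereas upweighting keeps every example and only changes the loss weights.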