Abstract:

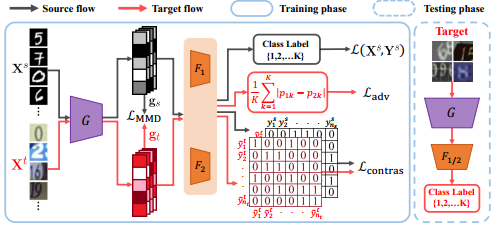

Multiple images of the same scene are often taken from many view directions. A pixel in any one of these images may have corresponding pixels in the others. However, we find that existing semantic segmentation methods do not always assign the same label to a pixel and its correspondences, as one would expect. Fortunately, multiple view geometry tells us that if the camera position is kept fixed and only its orientation varies, the corresponding pixels in the resulting images are related by a homography. Based on this fact, we propose to generate images that are identical to real images of the scene taken in certain novel view directions, and to use them for training and evaluation. We also introduce gradient-guided deformable convolution, which alleviates the inconsistency by learning a dynamic, appropriate receptive field from feature gradients. Furthermore, we present a novel consistency loss to enforce feature consistency. Compared with previous approaches, the proposed method significantly improves both cross-view consistency and semantic segmentation performance on images with abundant view directions, while maintaining comparable or better performance on existing datasets.
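
The pure-rotation relationship mentioned above can be illustrated with a minimal NumPy sketch. It assumes a pinhole camera with a known intrinsic matrix `K` and a rotation `R` between the two orientations, in which case the standard homography from multiple view geometry is `H = K R K^{-1}`. The intrinsic values, the rotation angle, and all function names are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np


def rotation_homography(K, R):
    """Homography mapping pixels of the original view to the rotated view.

    Assumes a pinhole camera whose centre stays fixed, with R rotating
    original-camera coordinates into the new camera frame.
    """
    return K @ R @ np.linalg.inv(K)


def warp_pixel(H, u, v):
    """Apply H to pixel (u, v) using homogeneous coordinates."""
    x = H @ np.array([u, v, 1.0])
    return x[:2] / x[2]


def yaw_rotation(angle_rad):
    """Rotation about the camera's vertical (y) axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def project(K, R, X):
    """Project a 3D point X (original camera frame) into the view rotated by R."""
    x = K @ (R @ X)
    return x[:2] / x[2]


# Hypothetical intrinsics: 500 px focal length, principal point (320, 240).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = yaw_rotation(np.deg2rad(10.0))  # assumed 10 degree change of orientation
H = rotation_homography(K, R)

# A 3D point seen in both views; its two projections should be linked by H.
X = np.array([0.3, -0.2, 4.0])
p_src = project(K, np.eye(3), X)   # pixel in the original view
p_dst = project(K, R, X)           # pixel in the rotated view
p_warp = warp_pixel(H, p_src[0], p_src[1])  # pixel predicted by the homography
```

Here `p_warp` should coincide with `p_dst` regardless of the depth of `X`, which is why an image for a novel view direction can be generated from an existing one without knowing the scene geometry, provided the camera centre does not move.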