Abstract:

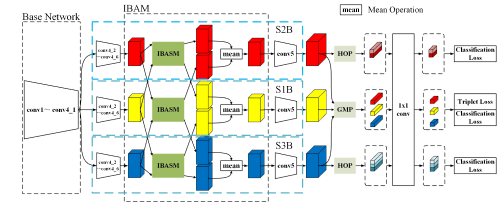

Many existing person re-identification methods have two limitations: (i) they suffer from missing body parts and occlusion, and (ii) they fail to capture diverse visual cues. To address these problems, we propose a Multi-Granularity Hypergraphs and Adversarial Complementary Learning (MGHACL) method. Specifically, we first uniformly partition the input images into several stripes, which are later used to obtain multi-granularity features. We then use the proposed MGHACL to learn both the high-order relations between these features, which make the learned features robust, and their complementary information, which contains different visual cues. Next, we integrate the learned high-order spatial relation information and the complementary information to improve the representation capability of each regional feature. Moreover, we use a supervision strategy to learn to extract more accurate global features. Extensive experiments demonstrate that our method outperforms state-of-the-art methods on three mainstream datasets: Market-1501, DukeMTMC-ReID, and CUHK03-NP.
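
The uniform stripe partition mentioned above can be illustrated with a minimal sketch. It is not the MGHACL implementation: the function name `multi_granularity_stripes`, the granularity set `(1, 2, 4)`, the `(C, H, W)` array layout, and the use of average pooling are all assumptions made for illustration, and the hypergraph and adversarial complementary learning stages are not shown.

```python
import numpy as np


def multi_granularity_stripes(feature_map, granularities=(1, 2, 4)):
    """Split a (C, H, W) array into uniform horizontal stripes.

    For each granularity g (an assumed setting, not taken from the paper),
    the height axis is cut into g equal stripes and each stripe is
    average-pooled into a C-dimensional vector.
    Returns a dict mapping g -> array of shape (g, C).
    """
    channels, height, _ = feature_map.shape
    features = {}
    for g in granularities:
        if height % g != 0:
            raise ValueError(f"height {height} is not divisible by {g}")
        stripe_height = height // g
        # Reshape to (C, g, stripe_height, W), then pool within each stripe.
        stripes = feature_map.reshape(channels, g, stripe_height, -1)
        features[g] = stripes.mean(axis=(2, 3)).T  # shape (g, C)
    return features


# Hypothetical input: 8 channels, height 24, width 8.
rng = np.random.default_rng(0)
fmap = rng.standard_normal((8, 24, 8))
feats = multi_granularity_stripes(fmap)
```

Under these assumptions, `feats[1]` holds a single global vector, while `feats[2]` and `feats[4]` hold progressively finer regional vectors, which is the kind of multi-granularity input a relation-learning stage could consume.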