Abstract:

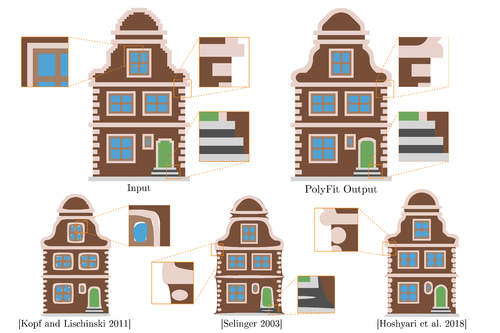

Raster clip-art images, which consist of distinctly colored regions separated by sharp boundaries, typically allow for a clear mental vector interpretation. Converting these images into vector format can facilitate compact lossless storage and enable numerous processing operations. Despite recent progress, existing vectorization methods that target such data frequently produce results that fail to meet viewer expectations. We present PolyFit, a new clip-art vectorization method that produces vectorizations well aligned with human preferences. Since segmentation of such inputs into regions has already been addressed successfully, we focus specifically on fitting piecewise smooth vector curves to the boundaries of the raster regions, a task on which prior methods are particularly prone to fail. While perceptual studies suggest the criteria humans are likely to use when mentally vectorizing boundaries, they provide no guidance on how exactly these criteria interact; learning these interactions directly is problematic because the solution space is very large. To obtain the desired solution, we first approximate the raster region boundaries with coarse intermediate polygons, leveraging a combination of perceptual cues and observations from studies of human preferences. We then use these intermediate polygons as auxiliary inputs for computing piecewise smooth vectorizations of the raster inputs. We define a finite set of potential polygon-to-curve primitive maps and learn, from human annotations, the mapping from polygons to their best-fitting primitive configurations. This yields a compact set of local raster and polygon properties whose combinations reliably predict the primitives humans expect. Finally, we use these primitives to obtain a globally consistent spline vectorization.
Extensive comparative user studies show that our method outperforms state-of-the-art approaches on a wide range of data: across multiple input types and resolutions, our results are preferred three times as often as those of the closest competitor.