Abstract:

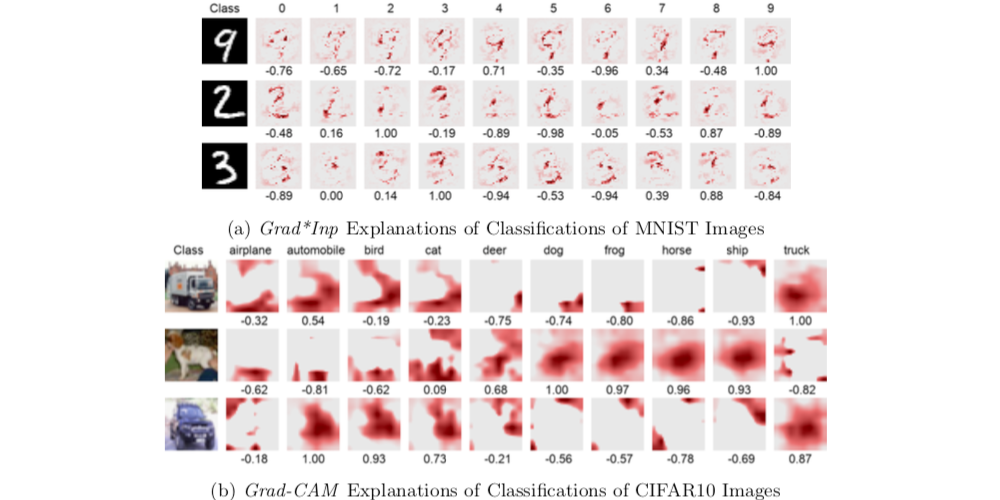

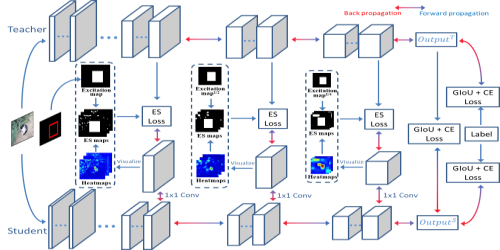

To reduce the overwhelming size of deep neural networks, teacher-student techniques transfer knowledge from a complex teacher network to a simple student network. We instead propose a novel method, the teacher-class network, which consists of a single teacher and multiple student networks (a class of students). Instead of transferring knowledge to only one student, the proposed method divides the learned space into sub-spaces, and each sub-space is learned by one student. Our students are not trained on problem-specific logits; they are trained to mimic the knowledge (dense representation) learned by the teacher network, so the combined knowledge learned by the class of students can be used to solve other problems. The proposed teacher-class architecture is evaluated on several benchmark datasets, including MNIST, Fashion MNIST, IMDB Movie Reviews, CIFAR-10, and ImageNet, on tasks such as image and sentiment classification. Our approach outperforms the state-of-the-art single-student approach in terms of accuracy and computational cost while achieving a 10- to 30-fold reduction in parameters. Code is available at https://github.com/musab-r/TCN.
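The idea of dividing the teacher's dense representation into sub-spaces, with one student per sub-space, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the released code: the teacher is a fixed random map standing in for a trained network, the split is into equal contiguous chunks, and each student is a linear model fitted by least squares rather than a neural network trained by gradient descent. All names (`teacher_rep`, `chunks`, `students`, `combined`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions, not values from the paper).
n_samples, in_dim, rep_dim, n_students = 200, 16, 12, 3

# Hypothetical stand-in for a trained teacher: a fixed map from inputs
# to a dense representation of width rep_dim.
X = rng.normal(size=(n_samples, in_dim))
W_teacher = rng.normal(size=(in_dim, rep_dim))
teacher_rep = np.tanh(X @ W_teacher)  # shape (n_samples, rep_dim)

# Divide the learned space into equal sub-spaces, one per student.
chunks = np.split(teacher_rep, n_students, axis=1)

# Each student regresses only its own chunk of the representation.
# Assumption: a linear least-squares fit replaces a small student network
# trained with a mean-squared-error loss.
students = [np.linalg.lstsq(X, chunk, rcond=None)[0] for chunk in chunks]

# Concatenate the students' outputs to approximate the full representation,
# which could then feed a task-specific classifier.
combined = np.concatenate([X @ W for W in students], axis=1)

mse = float(np.mean((combined - teacher_rep) ** 2))
print(f"combined shape: {combined.shape}, reconstruction MSE: {mse:.4f}")
```

Because the students target the representation rather than task logits, the concatenated output in this sketch is task-agnostic; a classifier for a different problem could be trained on top of `combined` without retraining the students.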