Abstract:

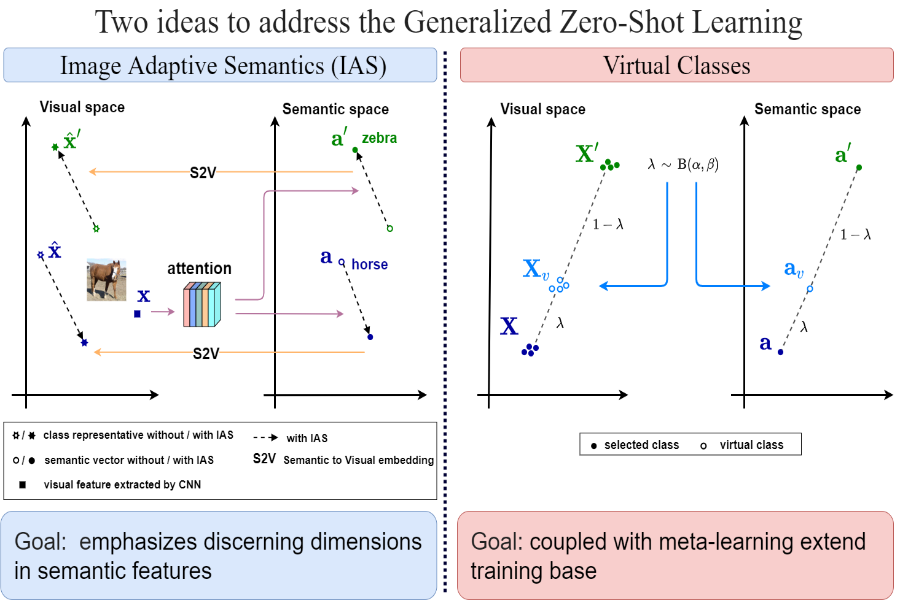

Zero-shot learning and domain generalization aim to overcome the scarcity of task-specific annotated data by addressing semantic shift and domain shift, respectively. However, real-world applications are often unconstrained and require handling unseen classes in unseen domains, a setting called zero-shot domain generalization, in which domain and semantic shifts occur simultaneously. Here, we propose a novel approach that learns domain-agnostic structured latent embeddings by projecting images from different domains and their class-specific semantic representations into a common latent space. Our method jointly pursues the following objectives: (i) aligning the multimodal cues from visual and text-based semantic concepts; (ii) partitioning the common latent space according to the domain-agnostic class-level semantic concepts; and (iii) learning domain invariance w.r.t. the visual-semantic joint distribution to generalize to unseen classes in unseen domains. Our experiments on challenging benchmarks such as DomainNet show the superiority of our approach over existing methods, with significant gains on difficult domains such as quickdraw and sketch.