Abstract:

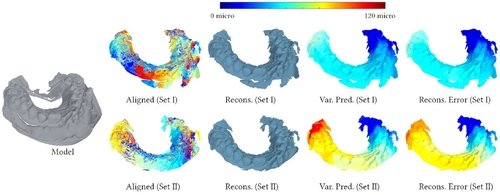

Kidney segmentation from 3D ultrasound images remains challenging due to the low signal-to-noise ratio and low-contrast object boundaries. Most recently proposed segmentation CNNs are trained with loss functions that treat each voxel independently. However, the desired output mask is a high-dimensional structured object, and such voxel-wise losses often fail to produce regularly shaped segmentation masks, especially in complex cases. In this work, we design a loss function that compares segmentation masks in a feature space built to describe explicit global shape attributes. We use a Spatial Transformer Network to estimate the 3D pose of a mask, and we project the resulting aligned mask onto a linear subspace describing the variations across objects. The resulting shape-feature vector is a concatenation of weighted rigid pose parameters and non-rigid deformation parameters with respect to a mean shape. We use the L1 distance to compare the predicted and ground-truth shape-feature vectors. We validate our method on a large 3D ultrasound kidney segmentation dataset. Using the same U-Net architecture, our loss function outperforms the standard Dice and cross-entropy loss functions used in the state-of-the-art nnU-Net approach.
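The shape-feature comparison described in the abstract can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions that the abstract does not state. The linear subspace is assumed to come from PCA on flattened, pose-aligned training masks. The rigid pose is assumed to be a plain parameter vector (here a hypothetical 9-vector, e.g. translation, rotation and scale) that in the described method would be predicted by the Spatial Transformer Network, which is not implemented here. `pose_weight` and `shape_weight` are hypothetical weights, and all function names are illustrative rather than the authors' code.

```python
import numpy as np

def fit_shape_basis(aligned_masks, n_components):
    """Assumed PCA shape model: mean shape plus top principal directions
    of flattened, pose-aligned training masks."""
    X = aligned_masks.reshape(len(aligned_masks), -1).astype(float)
    mean = X.mean(axis=0)
    # Rows of vt are orthonormal principal directions, sorted by singular value.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def shape_features(aligned_mask, pose, mean, basis, pose_weight, shape_weight):
    """Concatenate weighted rigid pose parameters with the non-rigid
    deformation coefficients (projection onto the basis, relative to the mean)."""
    coeffs = basis @ (aligned_mask.reshape(-1).astype(float) - mean)
    return np.concatenate([pose_weight * np.asarray(pose, dtype=float),
                           shape_weight * coeffs])

def shape_l1_loss(f_pred, f_true):
    """Mean absolute difference between two shape-feature vectors."""
    return float(np.abs(f_pred - f_true).mean())

# Usage on random toy "masks" (not real data).
rng = np.random.default_rng(0)
train = rng.random((10, 8, 8, 8)) > 0.5
mean, basis = fit_shape_basis(train, n_components=5)
f_pred = shape_features(train[0], np.zeros(9), mean, basis, 1.0, 1.0)
f_true = shape_features(train[1], np.ones(9), mean, basis, 1.0, 1.0)
loss = shape_l1_loss(f_pred, f_true)
```

Because NumPy does not track gradients, training a U-Net with a loss like this would require re-expressing it in an autodiff framework and applying it to the network's soft (probability) output rather than a binarized mask, with the PCA basis fixed in advance.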