Abstract:

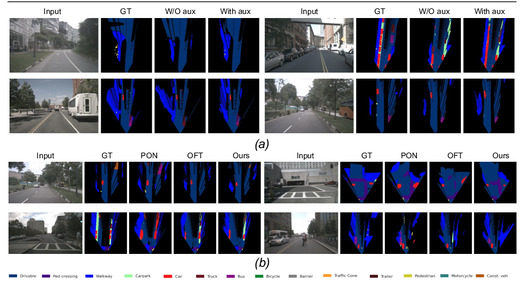

One of the key challenges for today's semantic segmentation approaches is to obtain robust and reliable segmentation results not only in good weather, but also in adverse conditions such as darkness, fog or heavy rain. For this purpose, data from several sensor types, such as camera and lidar, are required to compensate for the weather sensitivity of individual sensors. Hence, a semantic segmentation dataset that contains both camera and lidar data is necessary, but until recently no such dataset existed. Therefore, the ADUULM dataset was created, a semantic segmentation dataset that consists of finely annotated camera data and pixel-wise labeled lidar data recorded in diverse weather conditions. Additionally, the corresponding GPS, IMU and stereo information is provided, and a short video sequence is available for each annotated data sample. Furthermore, state-of-the-art semantic segmentation and drivable area detection approaches are evaluated on the proposed dataset, and the results show that new methods are required to obtain robust and reliable results in adverse weather conditions. The ADUULM dataset will be available online at https://www.uni-ulm.de/en/in/driveu/projects