Abstract:

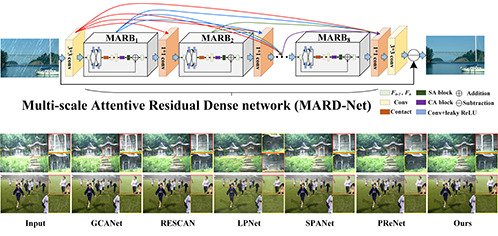

Single image deraining is a fundamental pre-processing step in many computer vision applications, improving both visual quality and the performance of subsequent high-level tasks under adverse weather conditions. This study proposes a novel multi-scale residual aggregation network to solve the single image deraining problem effectively. Specifically, we exploit a lightweight residual subnet with fewer than 10 layers as the deraining backbone to extract fine, detailed texture context at the original scale, and leverage a multi-scale context aggregation module (MCAM) to supply complementary semantic context that enhances the modeling capability of the overall deraining network. The designed MCAM consists of multi-resolution feature extraction blocks that capture diverse semantic contexts across different expanded receptive fields, and it conducts progressive feature fusion between adjacent scales with residual connections. This design is expected to concurrently disentangle the multi-scale structures of the scene content and the multiple rain layers in rainy images, and to capture high-level representative features for reconstructing the clean image. Moreover, motivated by the fact that the pooling operation and activation function adopted in deep learning may considerably affect prediction performance in high-level vision tasks such as image classification and object detection, we investigate generalized pooling and activation methods that take the surrounding spatial context into account instead of operating pixel-wise, and propose spatial context-aware pooling (SCAP) and activation (SCAA) for incorporation into our deraining network to boost performance. Extensive experiments on benchmark datasets demonstrate that our proposed method performs favorably against state-of-the-art (SoTA) deraining approaches.
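
To make the idea of progressive fusion between adjacent scales concrete, the following is a minimal sketch in plain Python, not the network described above. It rests on several assumptions that the abstract does not confirm: 2x2 average pooling stands in for the learned multi-resolution feature extraction blocks, nearest-neighbour upsampling stands in for any learned upscaling, fusion proceeds from coarse to fine, and the residual connection is a simple element-wise addition. All function names (`avg_pool2`, `upsample2`, `multi_scale_aggregate`) are hypothetical.

```python
# Illustrative sketch only: fixed averaging replaces the learned feature
# extraction blocks, whose exact form the abstract does not specify.

def avg_pool2(x):
    """Downsample a 2D grid with 2x2 average pooling (even dimensions assumed)."""
    h, w = len(x), len(x[0])
    return [[(x[i][j] + x[i][j + 1] + x[i + 1][j] + x[i + 1][j + 1]) / 4.0
             for j in range(0, w, 2)]
            for i in range(0, h, 2)]


def upsample2(x):
    """Upsample a 2D grid by a factor of 2 using nearest-neighbour repetition."""
    out = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add(a, b):
    """Element-wise sum of two grids of the same shape."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def multi_scale_aggregate(feat, num_scales=3):
    """Build a feature pyramid, then fuse adjacent scales from coarse to fine.

    Height and width must be divisible by 2 ** (num_scales - 1).
    """
    pyramid = [feat]
    for _ in range(num_scales - 1):
        pyramid.append(avg_pool2(pyramid[-1]))

    fused = pyramid[-1]  # start from the coarsest scale
    for finer in reversed(pyramid[:-1]):
        # Residual connection (assumed additive): the finer-scale feature
        # plus the upsampled result fused so far.
        fused = add(finer, upsample2(fused))
    return fused


if __name__ == "__main__":
    feature_map = [[1.0] * 4 for _ in range(4)]
    for row in multi_scale_aggregate(feature_map, num_scales=3):
        print(row)
```

In this toy version each coarser scale sees a wider receptive field through repeated pooling, and the additive fusion lets the original-scale detail pass through unchanged while coarser context is layered on top. A real implementation would replace the fixed pooling and addition with learned convolutional blocks.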