Abstract:

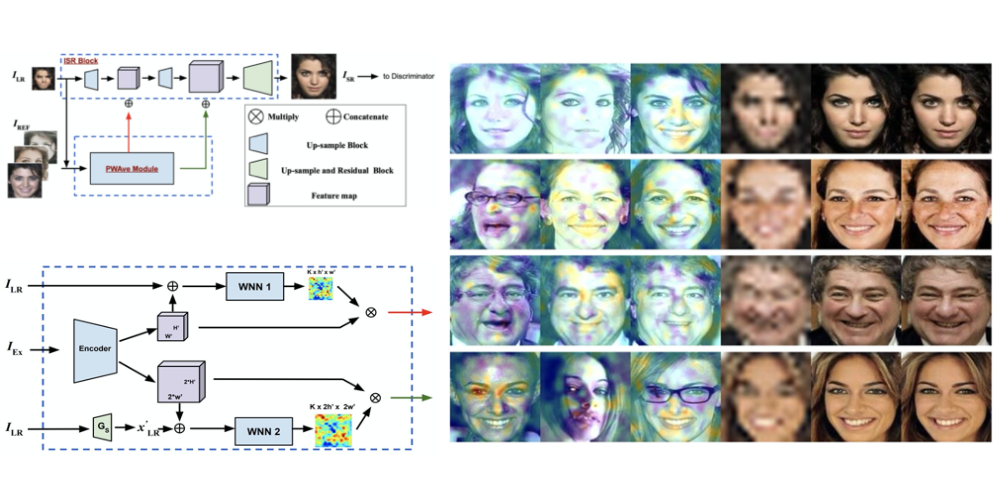

Recent news articles have accused face recognition of being “biased”, “sexist” or “racist”. There is consensus in the research literature that face recognition accuracy is lower for females, who often have both a higher false match rate and a higher false non-match rate. However, there is little published research aimed at identifying the cause of lower accuracy for females. For instance, the 2019 Face Recognition Vendor Test, which documents lower female accuracy across a broad range of algorithms and datasets, also lists “Analyze cause and effect” under the heading “What we did not do”. We present the first experimental analysis to identify major causes of lower face recognition accuracy for females on datasets where previous research has observed this result. Controlling for an equal amount of visible face in the test images reverses the apparently higher false non-match rate for females. Also, principal component analysis indicates that images of two different females are inherently more similar than images of two different males, which may account for the difference in false match rates.
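The false match rate (FMR) and false non-match rate (FNMR) above are both measured on similarity scores at a fixed decision threshold: FMR over impostor pairs (two different people), FNMR over genuine pairs (the same person). The minimal sketch below illustrates why inherently more similar impostor pairs can raise FMR. It assumes a score at or above the threshold counts as a match; the function names, the threshold of 0.5, and all score values are hypothetical toy numbers chosen for illustration, not data or code from this work.

```python
from typing import Sequence


def false_match_rate(impostor_scores: Sequence[float], threshold: float) -> float:
    """Fraction of impostor (different-identity) pairs scored as a match.

    Assumption: a score >= threshold is treated as a match decision.
    """
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)


def false_non_match_rate(genuine_scores: Sequence[float], threshold: float) -> float:
    """Fraction of genuine (same-identity) pairs scored as a non-match."""
    if not genuine_scores:
        raise ValueError("need at least one genuine score")
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


# Hypothetical toy scores, NOT results from the paper. Group B's impostor
# pairs are more similar to each other, so its impostor scores sit higher.
impostor_a = [0.10, 0.20, 0.25, 0.30, 0.45]
impostor_b = [0.20, 0.30, 0.40, 0.50, 0.55]
genuine = [0.40, 0.60, 0.70, 0.80, 0.90]
threshold = 0.5  # hypothetical operating threshold

print(false_match_rate(impostor_a, threshold))  # 0.0
print(false_match_rate(impostor_b, threshold))  # 0.4
print(false_non_match_rate(genuine, threshold))  # 0.2
```

With the threshold held fixed, shifting the impostor score distribution upward raises FMR even though the matcher itself is unchanged, which is the kind of effect the principal component analysis finding would suggest.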