Abstract:

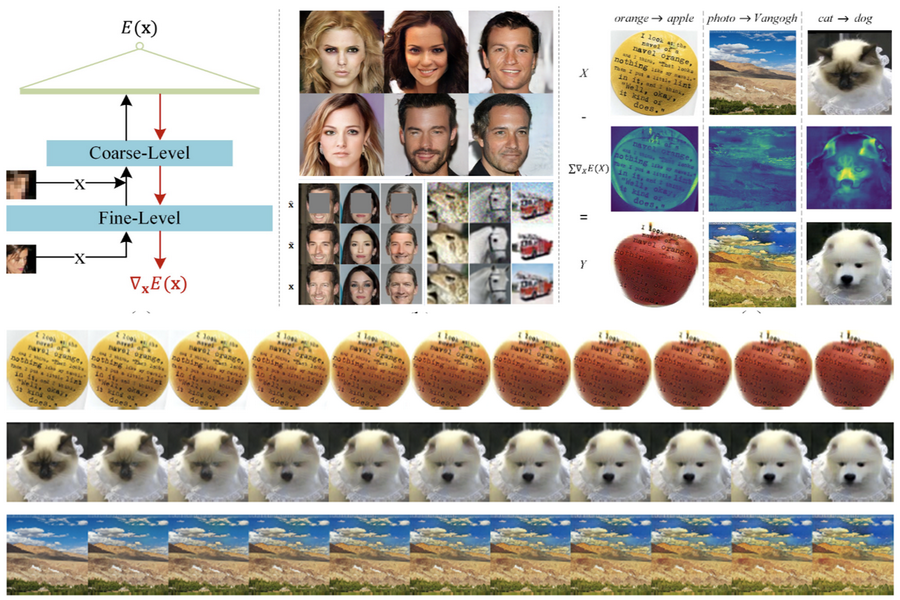

Bilinear pooling has achieved excellent performance in many computer vision tasks, such as fine-grained classification, scene recognition, and texture recognition. However, the high-dimensional features it produces can be inefficient to compute and store, and are prone to over-fitting. Random Maclaurin (RM) is a widely used, GPU-friendly approximation method for reducing the dimensionality of bilinear features. To achieve good performance, however, RM usually requires large projection matrices in practice, which makes it costly in both computation and memory. In this paper, we propose a Shifted Random Maclaurin (SRM) strategy for fast and compact bilinear pooling. At negligible extra computational cost, the proposed SRM provides an estimator with provably smaller variance than RM, which benefits the accuracy of the kernel approximation and thus the learning performance. With a small projection matrix, SRM achieves estimation performance comparable to that of RM with a large projection matrix, and thus improves efficiency. Furthermore, we upgrade SRM to SRM+ to further improve efficiency and to make compact bilinear pooling compatible with fast matrix normalization. We devise a Fast and Compact Bilinear Network (FCBN) built upon SRM+, which can be trained end-to-end. Systematic experiments on four public datasets demonstrate the effectiveness and efficiency of the proposed FCBN.
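
For readers unfamiliar with the baseline, the following is a minimal sketch of the standard Random Maclaurin projection for the second-order polynomial kernel, which is the kernel induced by bilinear (outer-product) features, since the inner product of vec(xx^T) and vec(yy^T) equals <x, y>^2. It illustrates only plain RM, not the proposed SRM or SRM+. The function names `make_rm_projection` and `rm_features`, the pure-Python list representation, and the choice of Rademacher (+1/-1) entries are assumptions made for illustration.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def make_rm_projection(dim_in, dim_out, rng):
    """Draw two independent Rademacher (+1/-1) matrices of shape (dim_out, dim_in).

    Hypothetical helper: the names and the list-of-lists layout are
    illustrative assumptions, not taken from the paper.
    """
    def rademacher():
        return [[rng.choice((-1.0, 1.0)) for _ in range(dim_in)]
                for _ in range(dim_out)]
    return rademacher(), rademacher()


def rm_features(x, w1, w2):
    """Compact feature z(x) with z_k = (w1_k . x) * (w2_k . x) / sqrt(D).

    In expectation over w1 and w2, dot(z(x), z(y)) equals dot(x, y) ** 2.
    """
    scale = 1.0 / math.sqrt(len(w1))
    return [scale * dot(r1, x) * dot(r2, x) for r1, r2 in zip(w1, w2)]
```

A usage sketch: draw the projection once with `w1, w2 = make_rm_projection(d, D, random.Random(0))`, map each local descriptor with `rm_features`, and compare `dot(rm_features(x, w1, w2), rm_features(y, w1, w2))` against `dot(x, y) ** 2`. The estimate is unbiased, and its variance shrinks as the output dimension D grows, which is why plain RM tends to need a large projection matrix to approximate the kernel well.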