Abstract:

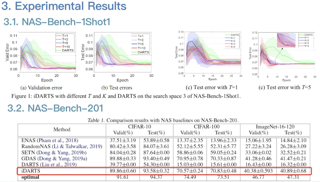

One-shot architecture search, which aims to explore all candidate operations jointly within a single model, is an active direction in Neural Architecture Search (NAS). A well-known one-shot method, Differentiable Architecture Search (DARTS), applies a continuous relaxation to the importance of each architectural choice, which results in a bi-level optimization problem. However, as many recent studies have shown, DARTS does not always work robustly on new tasks, mainly because the bi-level optimization is solved only approximately. In this paper, we address one-shot neural architecture search by adopting a directed probabilistic graphical model to represent the joint probability distribution over data and model. Neural architectures are then searched for and optimized by Gibbs sampling. We recast the bi-level optimization problem as Gibbs sampling from the posterior distribution, which expresses the preferences for different models given the observed dataset. We evaluate the proposed NAS method, GibbsNAS, on the search space used in DARTS/ENAS and on the NAS-Bench-201 search space. Experimental results on multiple search spaces show the efficacy and stability of our approach.
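
For orientation, the contrast between the two formulations can be sketched in standard notation. The symbols below are assumptions introduced only for illustration and are not taken from the paper: $\alpha$ denotes the relaxed architecture parameters, $w$ the network weights, $\mathcal{D}$ the observed dataset, and $t$ the sampling iteration. The first line is the usual statement of the DARTS bi-level problem; the second is a generic two-block Gibbs sweep over $(w, \alpha)$, which may differ from the exact conditionals that GibbsNAS derives from its graphical model.

```latex
% DARTS: bi-level optimization over architecture parameters and weights
\min_{\alpha} \; \mathcal{L}_{\mathrm{val}}\bigl(w^{*}(\alpha), \alpha\bigr)
\quad \text{s.t.} \quad
w^{*}(\alpha) = \operatorname*{arg\,min}_{w} \; \mathcal{L}_{\mathrm{train}}(w, \alpha)

% Generic two-block Gibbs sampling from the joint posterior p(w, \alpha \mid \mathcal{D})
w^{(t+1)} \sim p\bigl(w \mid \alpha^{(t)}, \mathcal{D}\bigr),
\qquad
\alpha^{(t+1)} \sim p\bigl(\alpha \mid w^{(t+1)}, \mathcal{D}\bigr)
```

Under this reading, the alternating updates of the bi-level scheme are replaced by alternating draws from conditional distributions, so the search targets the posterior over models rather than relying on an approximate solution of the inner problem.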