Abstract:

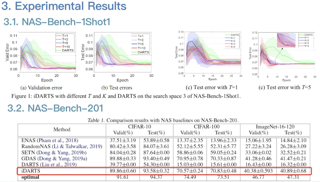

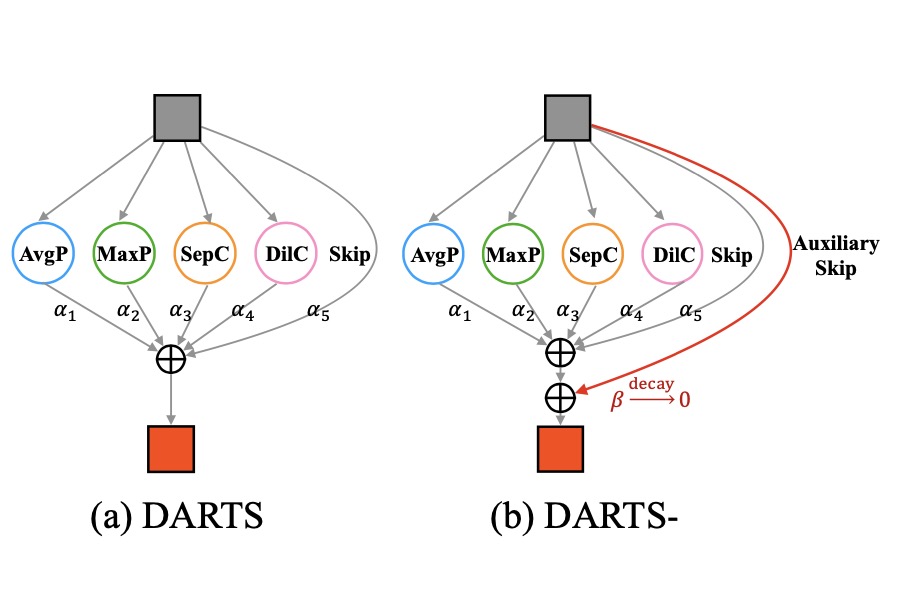

Differentiable architecture search (DARTS) has become a popular method for neural architecture search (NAS) owing to its adaptability and low computational cost. However, since the publication of DARTS, it has been found that DARTS often yields a sub-optimal neural architecture because its architecture parameters do not accurately represent operation strengths. Through theoretical analysis and empirical observation, we show that this issue arises from the existence of unnormalized operations. Based on this finding, we propose variance-stationary differentiable architecture search (VS-DARTS), which consists of node normalization, a local adaptive learning rate, and $\sqrt{\beta}$-continuous relaxation. Taken together, these components make the architecture parameters a more reliable metric for deriving a desirable architecture without increasing the search cost. In addition to the theoretical motivation behind each component of VS-DARTS, we provide experimental results demonstrating that the components synergize to significantly improve search performance. The architecture searched by VS-DARTS achieves a test error of 2.50% on CIFAR-10 and 24.7% on ImageNet.
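
For readers unfamiliar with the baseline, the following is a minimal sketch of the continuous relaxation used in standard DARTS, not of VS-DARTS itself. Each edge outputs a softmax-weighted sum of its candidate operations, and the final architecture keeps the operation with the largest architecture parameter. The scalar toy operations and the names `mixed_op`, `derive_op`, `names`, and `ops` are assumptions made for illustration only; real search spaces use convolution, pooling, and skip operations on tensors.

```python
import math


def softmax(alphas):
    """Numerically stable softmax over a list of architecture parameters."""
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, ops, alphas):
    """Standard DARTS relaxation: softmax(alpha)-weighted sum of candidate ops."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, ops))


def derive_op(names, alphas):
    """Discretization step: keep the candidate with the largest alpha."""
    best = max(range(len(alphas)), key=lambda i: alphas[i])
    return names[best]


# Hypothetical scalar candidates standing in for real operations.
names = ["skip", "scale_half", "zero"]
ops = [lambda x: x, lambda x: 0.5 * x, lambda x: 0.0]

# With equal alphas, every candidate receives weight 1/3.
out = mixed_op(2.0, ops, [0.0, 0.0, 0.0])

# After search, the operation is chosen from alpha alone.
chosen = derive_op(names, [0.1, 0.7, -0.3])
```

Because the discretization step looks only at the architecture parameters and not at the scale of each operation's output, the derived architecture is only as good as the correspondence between those parameters and the true operation strengths, which is the correspondence the abstract identifies as unreliable.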