Abstract:

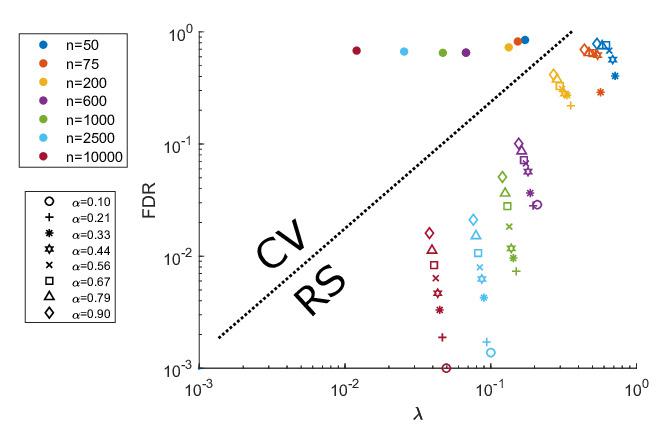

We propose novel coordinate descent (CD) methods for minimizing nonconvex functions comprising three terms: (i) a continuously differentiable term, (ii) a simple convex term, and (iii) a concave and continuous term. First, by extending randomized CD to nonsmooth nonconvex settings, we develop a coordinate subgradient method that randomly updates block-coordinate variables using a block composite subgradient mapping. This method converges asymptotically to critical points, with a proven sublinear convergence rate for a certain optimality measure. Second, we develop a randomly permuted CD method with two alternating steps: linearizing the concave part and cycling through the variables. We prove asymptotic convergence to critical points and establish a sublinear complexity rate for objectives with both smooth and concave components. Third, we develop a randomized proximal difference-of-convex (DC) algorithm in which each subproblem is solved inexactly by accelerated coordinate descent (ACD). Convergence is guaranteed with at most a few ACD iterations per DC subproblem, and we establish the complexity of identifying certain approximate critical points. Fourth, we extend the third method to minimize smooth and composite weakly convex functions, and show its advantages over gradient methods for ill-conditioned nonconvex functions, namely weakly convex functions with a high ratio of Lipschitz constant to negative curvature. Finally, an empirical study on sparsity-inducing learning models demonstrates that CD methods are superior to gradient-based methods for certain large-scale problems.
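To make the three-term structure concrete, the following is a minimal sketch of a randomized coordinate update that linearizes the concave term and applies a proximal step to the simple convex term. It is not the algorithm analyzed in this work. The problem instance (least squares as the smooth term, an ℓ1 penalty as the simple convex term, and a negative ℓ2 norm as the concave term) and all names (`randomized_dc_cd`, `soft_threshold`, `objective`, `lam`) are assumptions chosen for illustration.

```python
import numpy as np


def soft_threshold(z, t):
    """Proximal operator of t * |.| evaluated at the scalar z."""
    return np.sign(z) * max(abs(z) - t, 0.0)


def objective(A, b, x, lam):
    """F(x) = 0.5*||Ax - b||^2 + lam*||x||_1 - lam*||x||_2 (assumed instance)."""
    smooth = 0.5 * np.sum((A @ x - b) ** 2)
    return smooth + lam * (np.sum(np.abs(x)) - np.linalg.norm(x))


def randomized_dc_cd(A, b, lam, n_iters=2000, seed=0):
    """Randomized coordinate proximal steps with the concave term linearized."""
    rng = np.random.default_rng(seed)
    n = A.shape[1]
    x = np.zeros(n)
    r = A @ x - b                    # residual, kept in sync with x
    L = np.sum(A ** 2, axis=0)       # coordinate-wise Lipschitz constants
    history = [objective(A, b, x, lam)]
    for _ in range(n_iters):
        i = rng.integers(n)          # sample one coordinate uniformly
        if L[i] == 0.0:
            continue                 # column of zeros: nothing to update
        # Subgradient of the convex function lam*||x||_2 (0 is valid at x = 0).
        norm_x = np.linalg.norm(x)
        v_i = lam * x[i] / norm_x if norm_x > 0 else 0.0
        # Partial gradient of the smooth term minus the linearized concave part.
        g_i = A[:, i] @ r - v_i
        new_xi = soft_threshold(x[i] - g_i / L[i], lam / L[i])
        r += A[:, i] * (new_xi - x[i])
        x[i] = new_xi
        history.append(objective(A, b, x, lam))
    return x, history
```

In this sketch each step minimizes a coordinate-wise upper bound of the objective that is tight at the current iterate, so the recorded objective values should be nonincreasing. Recomputing the full objective at every step is done only for monitoring and would be avoided in a large-scale setting.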