Abstract:

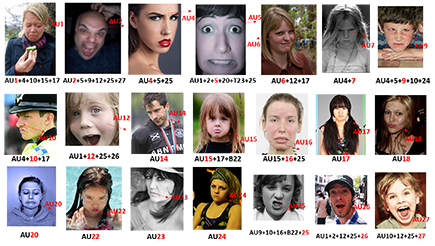

Much of the work on automatic facial expression recognition relies on databases containing a limited number of emotion classes and their exaggerated facial configurations (generally six prototypical facial expressions), based on Ekman's Basic Emotion Theory. However, recent studies have revealed that facial expressions in everyday life can blend multiple basic emotions, and the emotion labels for these in-the-wild facial expressions cannot easily be assigned solely from predefined action unit (AU) patterns. How to analyze the AUs of such complex expressions is still an open question. To address this issue, we develop RAF-AU, a database that employs both a sign-based (i.e., AUs) and a judgement-based (i.e., perceived emotion) approach to annotating blended facial expressions in the wild. We first review the annotation methods in existing databases and identify crowdsourcing as a promising strategy for labeling in-the-wild facial expressions. RAF-AU is then finely annotated by experienced coders, and on it we conduct a preliminary investigation of which key AUs contribute most to a perceived emotion and of the relationship between AUs and facial expressions. Finally, we provide a baseline for AU recognition on RAF-AU using popular features and multi-label learning methods.