Abstract:

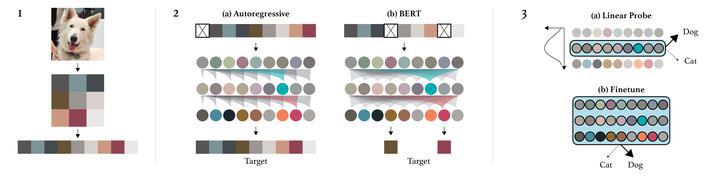

ImageNet pre-training has long been considered crucial by the fine-grained sketch-based image retrieval (FG-SBIR) community due to the lack of large sketch-photo paired datasets for FG-SBIR training. In this paper, we propose a self-supervised alternative for representation pre-training. Specifically, we consider the jigsaw puzzle game of recomposing images from shuffled parts. We identify two key facets of jigsaw task design that are required for effective FG-SBIR pre-training. The first is formulating the puzzle in a mixed-modality fashion. The second is that framing the optimisation as permutation matrix inference via Sinkhorn iterations is more effective than the common classifier formulation of jigsaw self-supervision. Experiments show that this self-supervised pre-training strategy significantly outperforms the standard ImageNet-based pipeline across all four product-level FG-SBIR benchmarks. Interestingly, it also leads to improved cross-category generalisation across both the pre-train/fine-tune and fine-tune/testing stages.
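The two design facets can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation, only the general technique under stated assumptions. The hypothetical helper `make_mixed_modal_puzzle` builds one puzzle whose tiles come from either the photo or the sketch of the same instance (the 50/50 mixing probability is an assumption) and returns the ground-truth permutation matrix. `sinkhorn` turns a square score matrix into an approximately doubly-stochastic matrix by alternating row and column normalisation in log space. `permutation_loss` is a cross-entropy against the target matrix. The network that would produce the scores is replaced here by hand-made scores, and `decode` is a greedy per-row argmax, which is only a heuristic; an exact assignment solver such as the Hungarian algorithm would guarantee a valid permutation.

```python
import math
import random


def make_mixed_modal_puzzle(photo_tiles, sketch_tiles, rng, p_photo=0.5):
    """Hypothetical helper: pick each tile from the photo or the sketch
    (assumed mixing probability p_photo), then shuffle the tile order."""
    n = len(photo_tiles)
    mixed = [photo_tiles[k] if rng.random() < p_photo else sketch_tiles[k]
             for k in range(n)]
    perm = list(range(n))
    rng.shuffle(perm)
    shuffled = [mixed[perm[i]] for i in range(n)]
    # target[i][j] = 1 if shuffled slot i holds original tile j.
    target = [[1.0 if perm[i] == j else 0.0 for j in range(n)] for i in range(n)]
    return shuffled, target


def _logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sinkhorn(scores, n_iters=20, tau=1.0):
    """Alternate row/column normalisation of exp(scores / tau) in log space."""
    n = len(scores)
    log_p = [[s / tau for s in row] for row in scores]
    for _ in range(n_iters):
        for i in range(n):  # rows sum to 1
            lse = _logsumexp(log_p[i])
            log_p[i] = [v - lse for v in log_p[i]]
        for j in range(n):  # columns sum to 1
            lse = _logsumexp([log_p[i][j] for i in range(n)])
            for i in range(n):
                log_p[i][j] -= lse
    return [[math.exp(v) for v in row] for row in log_p]


def permutation_loss(p, target, eps=1e-12):
    """Mean cross-entropy between the soft permutation and the target matrix."""
    n = len(p)
    total = sum(target[i][j] * math.log(p[i][j] + eps)
                for i in range(n) for j in range(n))
    return -total / n


def decode(p):
    """Greedy row-wise argmax (heuristic; not guaranteed to be a permutation)."""
    return [max(range(len(row)), key=lambda j: row[j]) for row in p]


if __name__ == "__main__":
    rng = random.Random(0)
    tiles, target = make_mixed_modal_puzzle(
        [f"p{k}" for k in range(4)], [f"s{k}" for k in range(4)], rng)
    # Stand-in for network scores that favour the true assignment.
    scores = [[5.0 * t for t in row] for row in target]
    p = sinkhorn(scores, n_iters=30)
    print(tiles, decode(p), round(permutation_loss(p, target), 3))
```

Compared with a classifier over a fixed set of permutations, the Sinkhorn output scores every tile-to-slot assignment, so the number of outputs grows with the square of the tile count rather than with the size of a chosen permutation set.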