Abstract:

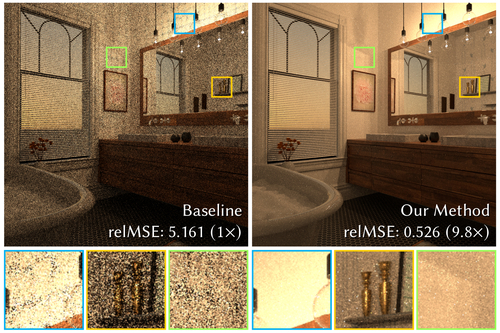

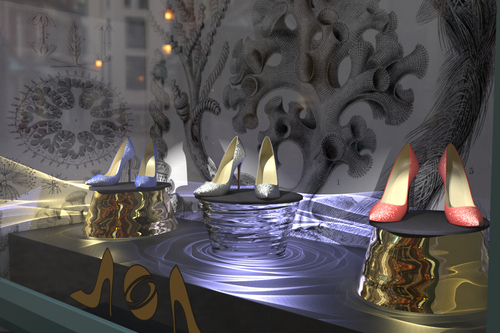

An event camera detects per-pixel intensity changes and produces an asynchronous event stream with low latency, high dynamic range, and low power consumption. As a trade-off, it has low spatial resolution. We propose an end-to-end network that reconstructs high-resolution, high dynamic range (HDR) images directly from the event stream. We evaluate our algorithm on both simulated and real-world sequences and verify that it captures fine scene details and outperforms combinations of state-of-the-art event-to-image algorithms with state-of-the-art super-resolution schemes by large margins on many quantitative measures. We further extend our method by using active pixel sensor (APS) frames or by reconstructing images iteratively.