Abstract:

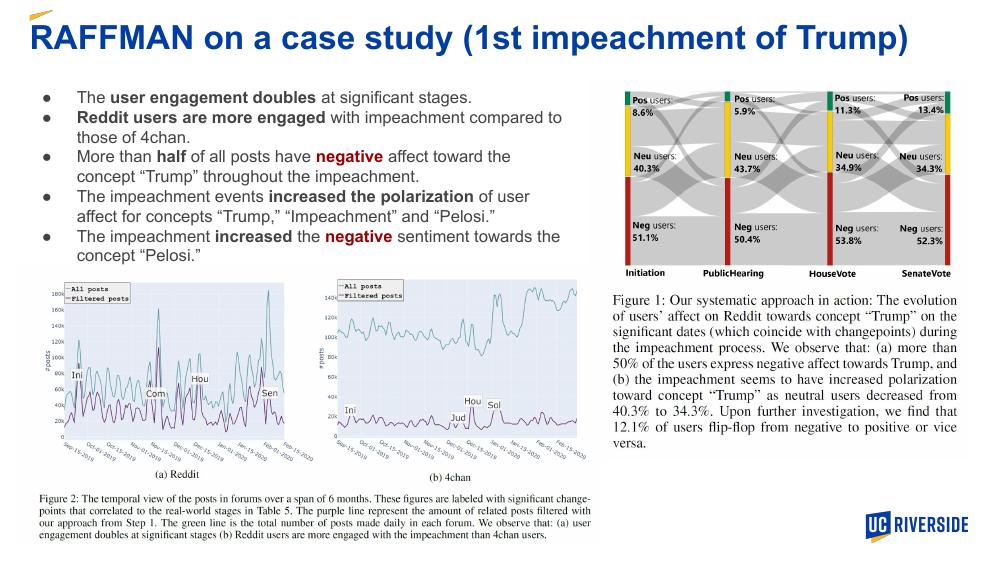

The proliferation of online misinformation has raised growing societal concerns about its potential consequences, e.g., polarizing the public and eroding trust in institutions. These consequences are framed around the public's susceptibility to such misinformation, a narrative that needs further investigation and quantification. To this end, our paper proposes an observational approach to model and measure expressed (dis)beliefs in (mis)information by leveraging social media comments as a proxy. We collect a sample of tweets in response to misinformation and annotate them with (dis)belief labels, explore the dataset using lexicon-based methods, and finally build classifiers based on state-of-the-art neural transfer-learning models. Under a domain-specific thresholding strategy, the best-performing unbiased classifier achieves macro-F1 scores of around 0.86 for disbelief and 0.80 for belief. Applying the classifier, we conduct a large-scale measurement study and show that, overall, 12%--15% of social media comments express disbelief and 26%--20% express belief, with the left bounds representing comments in response to true claims and the right bounds comments in response to false ones. Our results also suggest an extremely slight time effect of falsehood awareness, a positive effect of fact-checks on false claims, and a difference in (dis)belief across social media platforms.