Abstract:

We study the sample complexity of private synthetic data generation over a class of statistical queries of unbounded size, and show that any class that is privately proper PAC learnable admits a private synthetic data generator (possibly a computationally inefficient one). A differentially private synthetic data generator is an algorithm that receives an IID sample and publishes synthetic data that is indistinguishable from the true data with respect to a given fixed class of statistical queries. The synthetic data set can then be used by a data scientist without compromising the privacy of the original data set.

Previous work on synthetic data generators focused on the case in which the query class $\D$ is finite, and obtained sample complexity bounds that scale logarithmically with the size $|\D|$. Here we construct a private synthetic data generator whose sample complexity is independent of the domain size, and we replace finiteness with the assumption that $\D$ is privately PAC learnable. Private PAC learning is formally a weaker task than private synthetic data generation, so we obtain an equivalence between the two tasks.

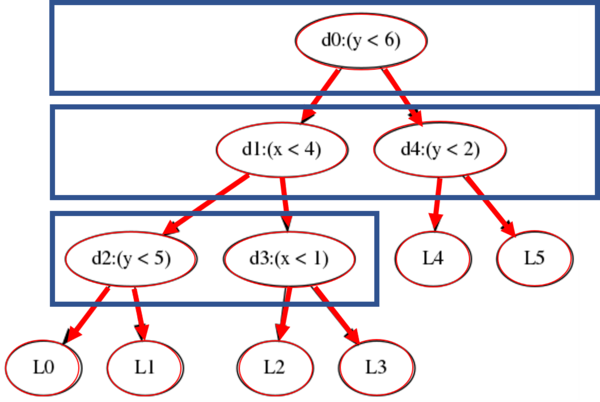

Our proof relies on a new type of synthetic data generator, the Sequential Synthetic Data Generator (sequential-SDG), which we believe may be of interest in its own right. A sequential-SDG is defined by a sequential game between a generator that proposes synthetic distributions and a discriminator that tries to distinguish between real and fake distributions. We characterize the classes that admit a sequential-SDG and show that they are exactly the Littlestone classes. Given the online nature of the sequential setting, it is natural that Littlestone classes arise in this context. Nevertheless, the characterization of sequential-SDGs by Littlestone classes turns out to be technically challenging and, to the best of our knowledge, does not follow via simple reductions to online prediction.