Abstract:

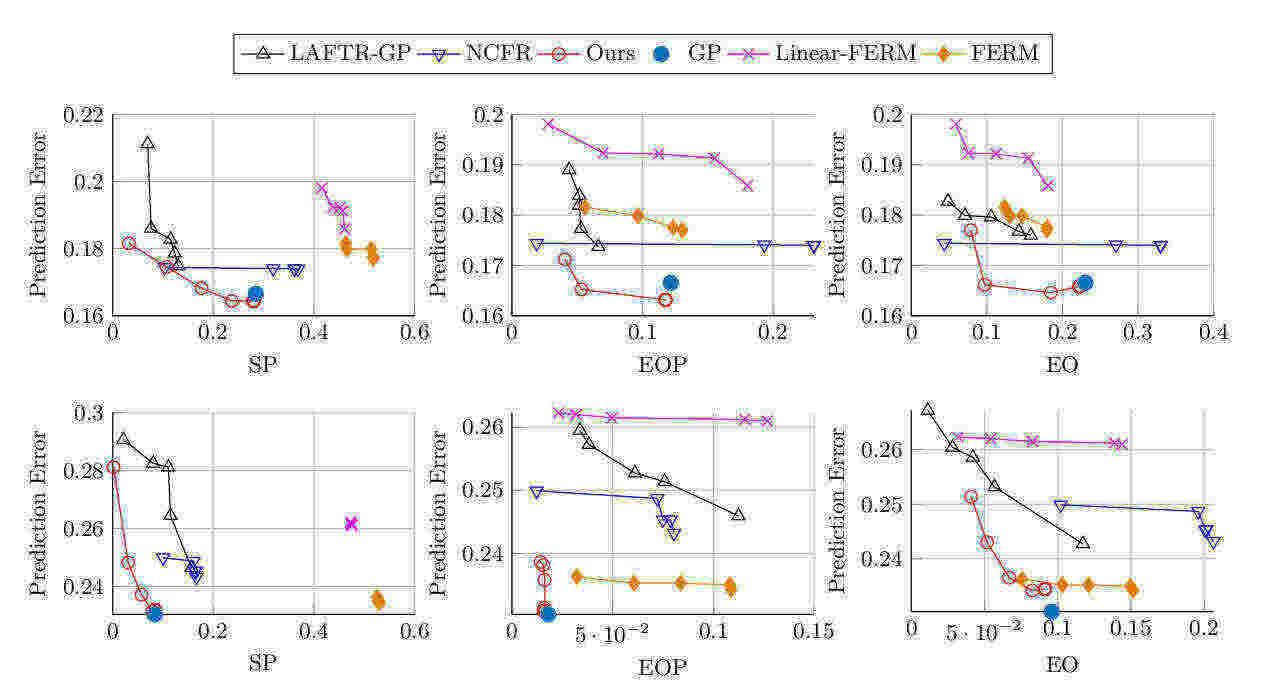

Consequential decisions are increasingly informed by sophisticated data-driven predictive models. However, consistently learning accurate predictive models requires access to ground truth labels. Unfortunately, in practice, labels may only exist conditional on certain decisions: if a loan is denied, the individual never has the opportunity to repay it, so no repayment outcome is ever observed. In this paper, we show that, in this selective labels setting, learning to predict is suboptimal in terms of both fairness and utility. To avoid this undesirable behavior, we propose to directly learn stochastic decision policies that maximize utility under fairness constraints. In the context of fair machine learning, our results suggest the need for a paradigm shift from 'learning to predict' to 'learning to decide'. Experiments on synthetic and real-world data illustrate the favorable properties of learning to decide, in terms of both utility and fairness.