Abstract:

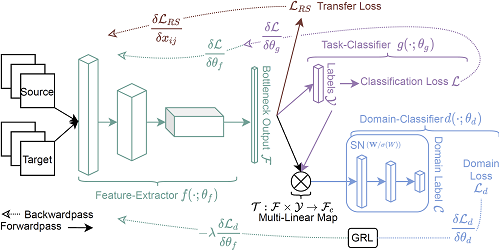

Modern neural networks have proven to be powerful function approximators, providing state-of-the-art performance in a wide variety of applications. However, they fall short in their ability to quantify confidence in their predictions, which can be crucial in high-stakes applications involving critical decision-making. Bayesian neural networks (BNNs) aim to address this problem by placing a prior distribution over the network parameters; conditioning on the training data then yields a posterior over the parameters and, in turn, a posterior predictive distribution that captures uncertainty about each prediction. While existing variants of BNNs produce reliable, albeit approximate, uncertainty estimates on in-distribution data, they have been shown to be over-confident in predictions on target data whose feature distribution differs from that of the training data, i.e., in the covariate shift setting. In this paper, we develop an approximate Bayesian inference scheme based on posterior regularisation, in which information from unlabelled target data is used to produce more appropriate uncertainty estimates for "covariate-shifted" predictions. Our regulariser can be easily applied to many current network architectures and inference schemes; here, we demonstrate its usefulness with Monte Carlo Dropout, showing that it quantifies uncertainty considerably more appropriately with very little extra effort. Empirical evaluations demonstrate that our method performs competitively with Bayesian and frequentist approaches to uncertainty estimation in neural networks.
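As background on the inference scheme the regulariser is demonstrated with, the following is a minimal sketch of standard Monte Carlo Dropout prediction. Dropout is kept active at test time, several stochastic forward passes are averaged to approximate the posterior predictive distribution, and the entropy of that average serves as an uncertainty score. This sketch does not implement the paper's posterior regulariser, whose form is not given in the abstract. The tiny one-hidden-layer network, its weights, and the function names (`forward`, `mc_dropout_predict`) are hypothetical and chosen purely for illustration; only the Python standard library is used.

```python
import math
import random

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, w1, w2, p_drop, rng):
    # Hypothetical network: one hidden ReLU layer followed by a linear output layer.
    # Dropout stays ON at prediction time, which is the defining trait of MC Dropout.
    hidden = []
    for row in w1:
        h = max(0.0, sum(wi * xi for wi, xi in zip(row, x)))
        # Inverted dropout: zero the unit with probability p_drop, rescale survivors.
        keep = rng.random() >= p_drop
        hidden.append(h / (1.0 - p_drop) if keep else 0.0)
    logits = [sum(wj * hj for wj, hj in zip(row, hidden)) for row in w2]
    return softmax(logits)

def mc_dropout_predict(x, w1, w2, p_drop=0.5, n_samples=100, seed=0):
    # Average the softmax outputs of n_samples stochastic passes to approximate
    # the posterior predictive, then report its entropy as an uncertainty score.
    # NOTE: plain MC Dropout only; no target-data regulariser is applied here.
    rng = random.Random(seed)
    n_classes = len(w2)
    mean = [0.0] * n_classes
    for _ in range(n_samples):
        probs = forward(x, w1, w2, p_drop, rng)
        for k in range(n_classes):
            mean[k] += probs[k] / n_samples
    entropy = -sum(p * math.log(p) for p in mean if p > 0)
    return mean, entropy

# Hypothetical weights: 2 input features, 4 hidden units, 2 output classes.
W1 = [[1.0, -0.5], [0.3, 0.8], [-0.7, 0.2], [0.5, 0.5]]
W2 = [[1.2, -0.4, 0.3, 0.9], [-0.6, 1.1, -0.2, 0.4]]

probs, h = mc_dropout_predict([1.0, 2.0], W1, W2)
print([round(p, 3) for p in probs], round(h, 3))
```

Higher predictive entropy signals lower confidence. The over-confidence on covariate-shifted inputs described above corresponds to this entropy remaining low for target inputs that lie far from the training data.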