Abstract:

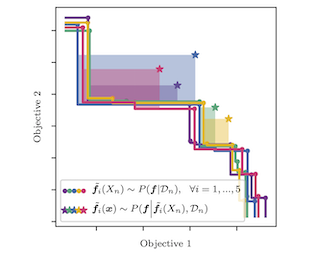

Thompson sampling has been demonstrated, both theoretically and empirically, to perform well on multi-armed bandit problems.

Despite its successes, one key obstacle to its use in a much broader range of scenarios is the need for perfect samples from posterior distributions at every iteration, which is often infeasible in practice.

We propose a Markov Chain Monte Carlo (MCMC) method tailored to Thompson sampling to address this issue. We construct a fast-converging Langevin algorithm to generate approximate samples with accuracy guarantees. We then leverage novel posterior concentration rates to analyze the statistical risk of the overall Thompson sampling method. Finally, we specify the necessary hyperparameters and the required computational resources for the MCMC procedure to match the optimal risk. The resulting algorithm enjoys both optimal instance-dependent frequentist regret and appealing computational complexity.
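
To make the idea concrete, the following is a minimal sketch of Thompson sampling in which exact posterior draws are replaced by a few steps of an unadjusted Langevin iteration. It is an illustration under assumptions that are not taken from the abstract: a Gaussian bandit with unit-variance rewards, an N(0, 1) prior on each arm mean, a warm start at the posterior mode, and illustrative choices for `steps` and `step_scale`. The function names `langevin_sample` and `thompson_langevin` are hypothetical, and the hyperparameters are not the ones the paper specifies.

```python
import math
import random


def langevin_sample(n, s, steps=50, step_scale=0.1, rng=random):
    """Approximate posterior draw for one arm's mean via unadjusted Langevin.

    Assumed model (illustrative only): N(0, 1) prior and unit-variance
    Gaussian rewards. With n observations summing to s, the gradient of the
    log-posterior at theta is s - (n + 1) * theta.
    """
    curvature = n + 1.0           # posterior precision under the assumed model
    h = step_scale / curvature    # step size shrinks as the posterior sharpens
    theta = s / curvature         # warm start at the posterior mode
    for _ in range(steps):
        grad = s - curvature * theta
        # Langevin update: gradient drift plus Gaussian noise of variance 2h.
        theta += h * grad + math.sqrt(2.0 * h) * rng.gauss(0.0, 1.0)
    return theta


def thompson_langevin(true_means, horizon, seed=0):
    """Run approximate Thompson sampling; return pull counts and pseudo-regret."""
    rng = random.Random(seed)
    k = len(true_means)
    counts = [0] * k
    sums = [0.0] * k
    best = max(true_means)
    regret = 0.0
    for _ in range(horizon):
        # One approximate posterior sample per arm, then play the argmax.
        samples = [langevin_sample(counts[a], sums[a], rng=rng) for a in range(k)]
        arm = max(range(k), key=lambda a: samples[a])
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        sums[arm] += reward
        regret += best - true_means[arm]
    return counts, regret


if __name__ == "__main__":
    counts, regret = thompson_langevin([0.0, 1.0], horizon=500)
    print("pulls per arm:", counts, "pseudo-regret:", regret)
```

Because the Langevin iteration is unadjusted, its stationary distribution is only close to the true posterior. In this Gaussian toy model the sampled variance is inflated by a factor of 2 / (2 - `step_scale`), which is the kind of approximation error the accuracy guarantees in the abstract are meant to control.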