Abstract:

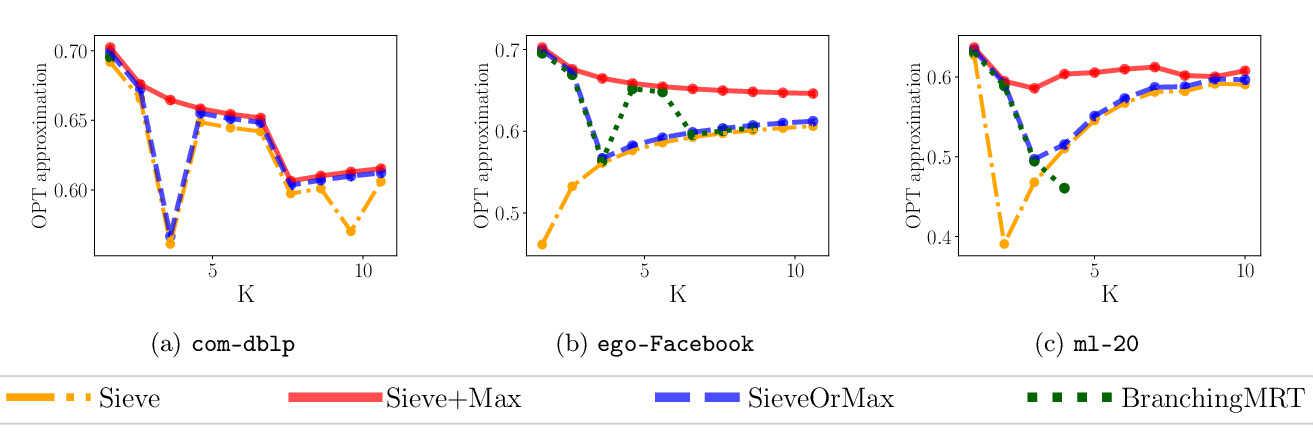

Recently there has been increased interest in using machine learning techniques to improve classical algorithms.

In this paper we study when it is possible to construct compact, composable sketches

for weighted

sampling and statistics estimation according to functions of data

frequencies. Such structures are now central components of

large-scale data analytics and machine learning pipelines. Many common

functions, however, such as thresholds and

$p$th frequency moments with $p>2$, are known to require polynomial

size sketches in the worst case. We explore performance beyond the

worst case under two different types of assumptions. The first is

having access to noisy \emph{advice} on item frequencies. This

continues the line of work of Hsu et al.~(ICLR 2019), who assume

predictions are provided by a machine learning model.

The second is providing guaranteed performance on a restricted class of

input frequency distributions that are better aligned with what is

observed in practice. This extends the work on heavy hitters under Zipfian distributions in a seminal paper of Charikar et al.~(ESA 2002).

Surprisingly, we show analytically and empirically that ``in practice'' small polylogarithmic-size sketches provide

accuracy for ``hard'' functions.