Abstract:

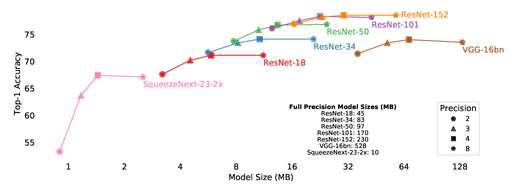

Network quantization is essential for deploying deep models to IoT devices because of its high efficiency, whether on specialized hardware such as TPUs or on general-purpose hardware such as CPUs and GPUs. Most existing quantization approaches rely on retraining to retain accuracy, which is referred to as quantization-aware training. However, this scheme assumes access to the training data, which is not always available. Moreover, retraining is a tedious and time-consuming procedure, which hinders the application of quantization to a wider range of tasks. Post-training quantization, on the other hand, does not suffer from these problems. However, owing to its simple optimization strategy, it has only been shown to be effective for 8-bit quantization. In this paper, we propose a Bit-Split and Stitching framework for lower-bit post-training quantization with minimal accuracy degradation. The proposed framework is validated on a variety of computer vision tasks, including image classification, object detection, and instance segmentation, with various network architectures.
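To make the "split" and "stitch" terminology concrete, the sketch below quantizes a small weight list to signed low-bit integers without any training data. It uses a max-absolute-value scale, which is a common post-training choice. It then splits each integer into ternary digits in {-1, 0, 1} and stitches them back. This is an illustrative sketch under stated assumptions, not the framework's actual algorithm. The symmetric scale, the sign-magnitude decomposition, and the names `quantize_symmetric`, `bit_split`, and `stitch` are all hypothetical. The abstract does not specify how individual bits are represented or optimized.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Hypothetical helper: map floats to signed ints in [-(2^(bits-1)-1), 2^(bits-1)-1].

    The scale comes from the largest |weight|, so no training data or retraining
    is needed (assumed post-training scheme, not the paper's optimizer).
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer, then clip to the representable range.
    ints = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return ints, scale


def bit_split(q: int, bits: int) -> List[int]:
    """Hypothetical helper: split q into (bits - 1) ternary digits t_m in {-1, 0, 1}.

    Assumed sign-magnitude decomposition: q = sum_m 2**m * t_m, where every
    nonzero digit carries the sign of q.
    """
    sign = -1 if q < 0 else 1
    magnitude = abs(q)
    return [sign * ((magnitude >> m) & 1) for m in range(bits - 1)]


def stitch(digits: List[int]) -> int:
    """Hypothetical helper: recombine ternary digits into the original integer."""
    return sum((2 ** m) * t for m, t in enumerate(digits))


if __name__ == "__main__":
    weights = [0.5, -0.25, 0.1, -0.7]
    ints, scale = quantize_symmetric(weights, bits=3)
    print(ints)  # [2, -1, 0, -3]
    print([bit_split(q, 3) for q in ints])  # [[0, 1], [-1, 0], [0, 0], [-1, -1]]
    print([stitch(bit_split(q, 3)) for q in ints])  # [2, -1, 0, -3]
```

Splitting into ternary digits means each digit can, in principle, be handled as a simpler sub-problem before stitching. The sketch only shows that the decomposition is lossless. It does not show how the digits would be optimized.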