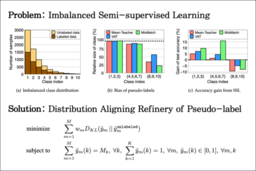

Abstract:

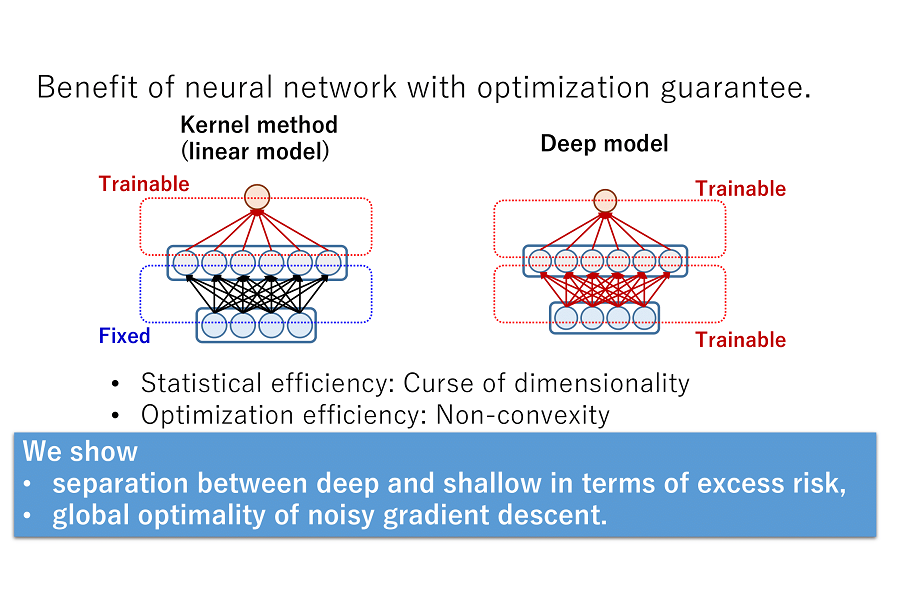

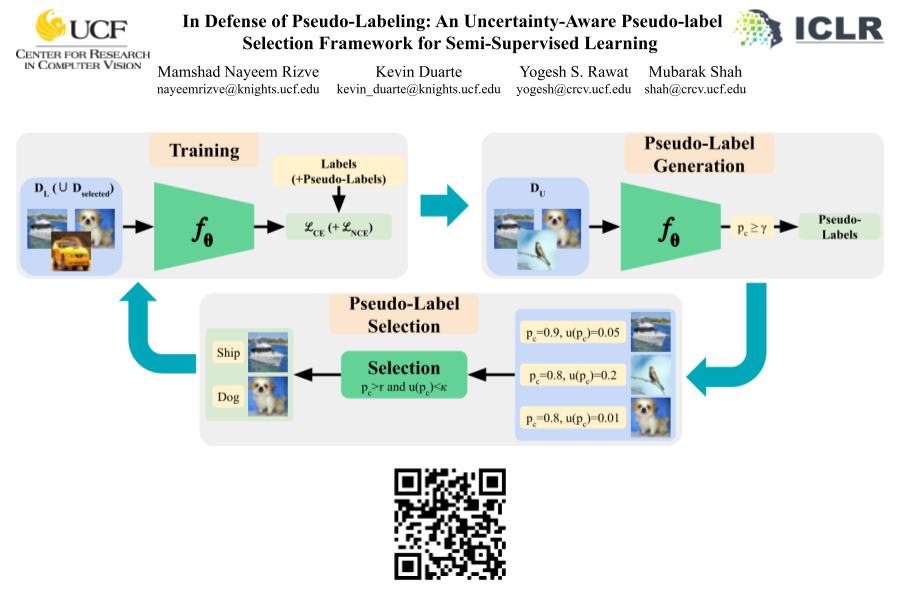

Deep semi-supervised learning (SSL) has been shown to be very effective. However, its performance degrades seriously when the class distributions of labeled and unlabeled data are mismatched; a common case is that the unlabeled data contain classes not seen in the labeled data. Efforts on this problem remain limited. This paper proposes a simple and effective safe deep SSL method to alleviate the performance harm caused by such unseen-class unlabeled data. In theory, the result learned by the new method is never worse than that learned from the labeled data alone, and its generalization is theoretically guaranteed to approach the optimum at the rate $O(\sqrt{d\ln(n)/n})$, even faster than the convergence rate in supervised learning associated with massive parameters. In experiments on benchmark data, unlike existing deep SSL methods, which are no longer as good as supervised learning once 40\% of the unlabeled data come from unseen classes, the new method still achieves a performance gain when more than 60\% of the unlabeled data come from unseen classes. The proposal is suitable for any deep SSL algorithm and can be easily extended to handle other cases of class distribution mismatch.